Air Force Integrated Baseline Review (IBR) Process Guide

Version 3.0 20 September 2012

This is a process guide for performing incremental Integrated Baseline Reviews (IBRs). The document

details the process and contains sample forms and templates to plan and execute an incremental IBR.

Integrated Baseline Review Process

1 Version 3.0, 20 September 2012

Contents

Air Force Integrated Baseline Review (IBR) Process

1. Background Information

1.1. Organization of Document

1.2. Purpose and Benefits of IBR

1.3. Current IBR Guidance and Process Documentation

2. Introduction to Revised Air Force Process

2.1. Objectives of the Revised Air Force Process

2.2. Incremental / Phased Approach

2.3. Benefits of Revised Air Force Process

3. Sequential Description of IBR Activities

3.1. RFP Preparation / Pre-Award Activities

3.2. Immediate Post Award Activities

3.3. IBR Phase I Artifact Quality and Data Integration

3.4. IBR Phase II – CAM and Business Office Discussions

3.5. IBR Exit Briefing and Follow-up

4. Detailed / Specific IBR Procedures

4.1. Organizing the Air Force IBR Team

4.2. Scoping the Performance Measurement Baseline

4.3. Training Throughout the IBR Process

4.4. Artifact Adjustment During the IBR Process

4.5. Integrating IBR Identified Risks into Program Risk Management

4.6. Treatment of Opportunities

4.7. Managing the Air Force IBR Process

4.7.1. Documentation Requirements

4.7.2. IBR Scoring

5. Summary

6. Templates and Samples (Appendices)

6.1. Sample IBR Schedule (MS Project File)

6.2. Sample IBR Program Event for IMP

6.3. Sample Notification Letter

6.4. Sample Artifact List

6.5. Sample Data Call Request

6.6. Sample Document Trace Narratives, Integration Proofs and Mapping

6.7. Sample Readiness Review Template

6.8. Sample Business Office / CAM Discussion Questions

6.9. Sample CAM Checklist

6.10. Sample CAM Scoring Criteria

6.11. Sample CAM Discussion Summary

6.12. Sample Action Tracker / Sample Action Item List

6.13. Sample IBR Exit Briefing

6.14. Sample Memo for the Record

6.15. Acronyms

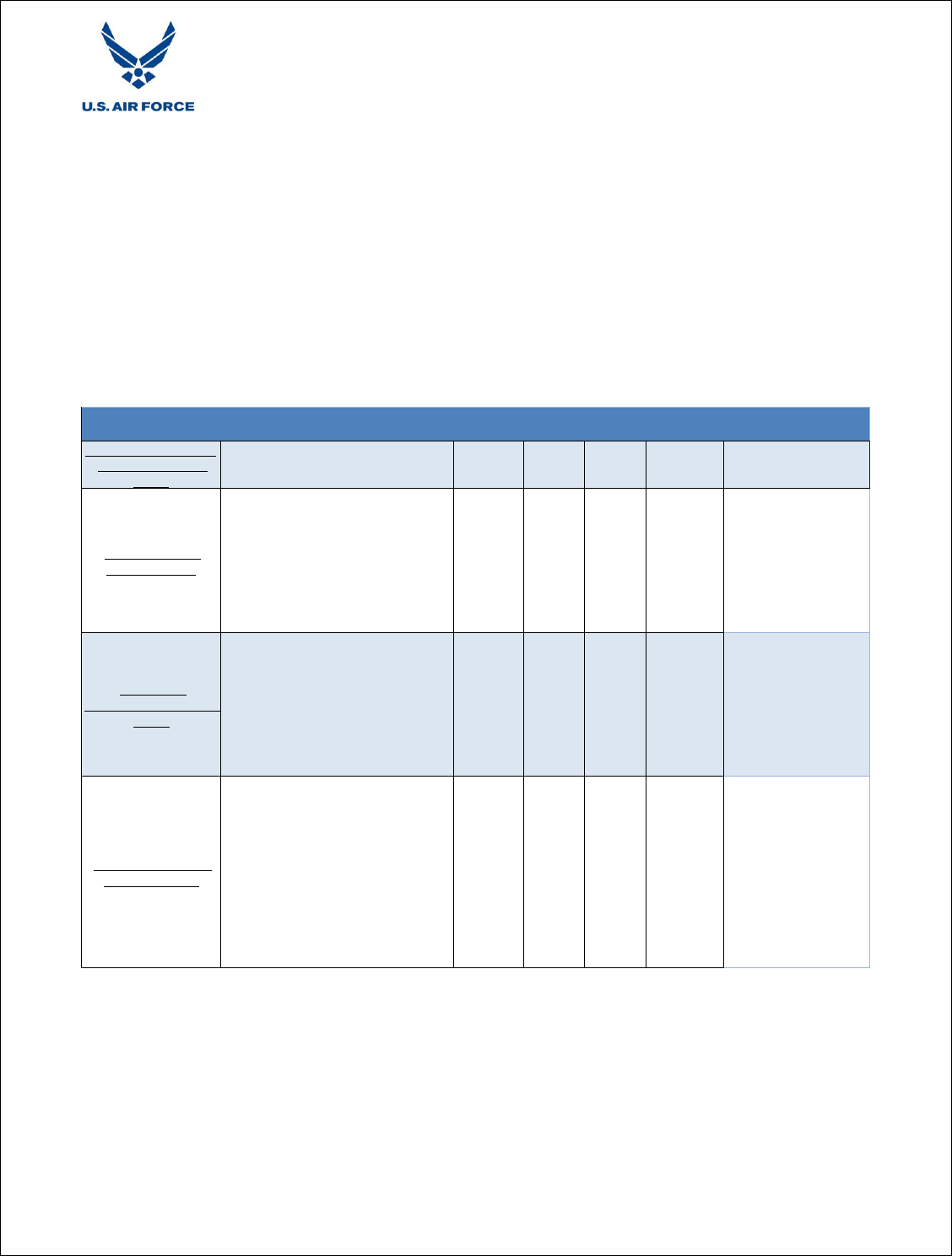

Document Configuration Management Log

| Version | Content Summary / Change Description |
| --- | --- |
| 1.0 - 31 March 2011 | Initial breakout of the IBR Process Document into separate volumes (Process Document and Workbook Guide). Pending Changes – Update to reflect the pilot process. |
| 1.1 - 13 May 2011 | Update of document to make changes necessary to complete the pilot process. Pending Change – Document will be completely revised following feedback from the initial pilot. |
| 2.0 - 12 January 2012 | Update of document and templates to reflect lessons learned from pilot and to create a single document with templates and samples and eliminate the workbook. Pending Change – Updates from users. |
| 3.0 - 20 September 2012 | Update document for lessons learned in tailoring artifacts, artifact integration, and risk management interfaces. |

Air Force Integrated Baseline Review (IBR) Process

1. Background Information

This document has been approved, but not mandated, for use. Please contact SAF/AQXC prior to use

and provide feedback from process use. This document incorporates revisions resulting from the initial

pilot.

The IBR process began a pilot in late March 2011 on the KC-46 Tanker Program. SAF/AQXC personnel

(authors of the process) were acting as advisors to the KC-46 Program Office as they went through this

process. In August 2011, the IBR pilot was completed. The incremental approach defined by this IBR

process was well received by both the contractor and Government Program Office. Several updates were

made to the process early in the process planning. The process originally drafted called for a number of

integration meetings organized by IBR risk area to validate the artifacts (documents) that define the

program baseline. Early planning indicated that some of the meetings could be consolidated into fewer

meetings. In addition, the integration of the five risk areas is now one process rather than individual

integration meetings. The IBR process has two phases. The first phase concentrates on the documents

that represent the baseline, and the second phase focuses on contractor business office and Control

Account Manager (CAM) / Integrated Product Team (IPT) Lead discussions to ensure participants have a

thorough understanding of the program baseline.

The number of artifacts and integration points in the original IBR process was considerable. During early

planning, some artifacts were consolidated with their parent documents, such as including the Critical

Path as part of the Integrated Master Schedule (IMS) rather than a separate document. The current

number of artifacts has been reduced depending upon the acquisition phase of the program. A draft MS

Excel Workbook was provided with the original draft. The Workbook is replaced by a series of templates

and sample documents provided as appendices to this document.

This document will be updated based on the feedback from other users.

1.1. Organization of Document

This document contains several major sections:

Section 1 – Provides background and overview information

Section 2 – Provides an introduction to the Revised Air Force IBR Process

Section 3 – Provides a sequential description of IBR activities

Section 4 – Provides detailed, specific IBR procedures

Section 5 – Provides a process summary

Section 6 – Provides templates and sample forms for executing an IBR

1.2. Purpose and Benefits of IBR

The IBR is an essential program management tool for identifying, quantifying, and mitigating risks when

executing complex weapons system and information technology projects. The IBR concept was

developed in 1993 and published in DoD 5000.2-R due to a growing recognition within the Department

of Defense that unrealistic contract baselines were established, leading to significant cost and schedule

overruns and / or under-performance on technical objectives.

The IBR’s purpose is to develop a common understanding between the Air Force Program Management

Office (PMO) and the contractor PMO regarding the project’s baseline and the project’s technical,

schedule, cost, resources, and management process risks and impacts. The PMO cannot reduce risks

unless it first identifies them. The IBR helps to identify risks and opportunities and provides a means for

assessing their severity in a standardized and transparent fashion. The PMO and other stakeholders then

use the IBR’s results to make management decisions that consider cost, schedule, and technical tradeoffs.

These decisions include re-defining the program requirements or objectives, developing risk handling

plans, prioritizing where and when to apply resources, and other means to achieve an executable and

realistic program baseline.

Historically, IBRs were conducted without regard for a standard process. The lack of a quantifiable and

repeatable IBR process has resulted in inconsistent execution across the Air Force. This guide is a

metrics-based approach designed to:

Standardize the rigor that is required in an IBR

Increase program performance through a standard IBR process

Enable comparison of IBR results between programs

Provide cost-effective tools to aid the process

This document provides a standardized process for planning and conducting IBRs across the Air Force

enterprise. It establishes a disciplined approach to identifying and quantifying the risks and opportunities

inherent in Contractor performance plans and aligns the IBR process with established Air Force and

Department of Defense instructions and guidance.

Perhaps more importantly, this updated process and new instruction aim to streamline, simplify, and

focus the program team on building a meaningful, achievable, and truly integrated baseline that helps

identify program execution risks and opportunities early enough to enable effective course corrections.

The new instruction promotes Government and industry working collaboratively from the pre-contract

award phase through IBR closeout. This instruction also supersedes existing Air Force IBR team

handbooks and guides and provides sample worksheets, checklists, questionnaires, and other tools that

help the Government and Contractor PMO through all phases of the IBR process.

Note: Hereafter within this document, the term PMO refers to the joint Government and

Contractor program team. If a process or step specifically addresses a Government or

Contractor role or responsibility, then “Government” or “Contractor” is used

accordingly.

The benefits of this IBR process include:

A common understanding and quantification of program risks and opportunities

Early management insight into baseline planning assumptions and resources

Comparison of expectations, allowing differences to be addressed early in the planning phase

Correction of baseline planning errors and omissions

In-depth understanding of developing performance variances

Improved early warning of significant risks

Resource targeting to address challenges and handle risks

Mutual commitment by the Government and contractor PMOs to manage to the baseline

1.3. Current IBR Guidance and Process Documentation

IBRs are normally limited to cost and incentive contracts with an Earned Value Management

requirement. Department of Defense acquisition policy 48 CFR Parts 252 and 234, as flowed down to

Department of Defense Instruction (DoDI) 5000.02, Defense Federal Acquisition Regulation Supplement (DFARS)

subpart 234.203(2) and DFARS clause 252.234-7002 (May 2011) require conducting IBRs on all cost and

incentive contracts valued at $20M or greater. An IBR is also required on any subcontract, intra-

Government work agreement, or other agreement that meets or exceeds the $20M threshold for Earned

Value Management (EVM) implementation. The Integrated Program Management Report (IPMR) DID,

DI-MGMT-81861 also lists the requirements for the performance of an IBR.
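The threshold rule described above can be sketched as a simple check. This is an illustration only: the function name, the contract-type labels, and the flat dollar comparison are assumptions made for this example, and the cited regulations and contract clauses remain the authority for any real determination.

```python
from dataclasses import dataclass

# Threshold stated in this section: $20M or greater.
IBR_THRESHOLD_USD = 20_000_000

# Hypothetical labels for the contract families named in this section.
EVM_CONTRACT_TYPES = {"cost", "incentive"}


@dataclass
class Agreement:
    """A contract, subcontract, or intra-Government work agreement."""
    name: str
    contract_type: str  # illustrative labels such as "cost", "incentive", "ffp"
    value_usd: float


def ibr_required(agreement: Agreement) -> bool:
    """Return True for a cost or incentive agreement at or above the threshold."""
    return (
        agreement.contract_type in EVM_CONTRACT_TYPES
        and agreement.value_usd >= IBR_THRESHOLD_USD
    )


if __name__ == "__main__":
    # Hypothetical agreements, chosen only to exercise the rule.
    examples = [
        Agreement("Prime development contract", "cost", 250_000_000),
        Agreement("Small incentive subcontract", "incentive", 5_000_000),
        Agreement("Fixed-price supplier", "ffp", 60_000_000),
    ]
    for a in examples:
        print(f"{a.name}: IBR required = {ibr_required(a)}")
```

The check deliberately ignores the re-baselining triggers (such as an OTB or OTS) discussed in the next paragraph; those would need a separate event-driven rule.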

The IBR is not a one-time event or a single-point review. The program team conducts additional IBRs

when internal or external events significantly change a project’s baseline. These types of events include

changes to a project’s contract requirements or content, funding perturbations, or when a major milestone

occurs, such as moving from development to production. Additionally, IBRs are conducted whenever an

Over Target Baseline (OTB) or Over Target Schedule (OTS) is implemented.

The AF IBR Process Document presents an incremental approach to conducting the IBR. The DoD

Earned Value Management Implementation Guide (EVMIG), October 2006 and NDIA IBR Guide,

September 2010 are two current documents that reflect a slightly different approach to conducting an IBR

from that proposed in this document. Both are guidance rather than compliance documents. Within the

Air Force, acquisition organizations should use this document as the principal guidance for conducting an

IBR.

2. Introduction to Revised Air Force Process

This section of the document provides an overview of the incremental IBR process.

2.1. Objectives of the Revised Air Force Process

From an acquisition oversight perspective, the objective of this process is to provide a standardized

approach for conducting IBRs. IBRs are infrequently performed activities. As a result, few personnel

have experience with multiple IBRs. Using a standardized process across the Air Force acquisition

community will overcome that limited experience and reduce the amount of training required.

Similarly, the acquisition oversight organizations have a need to analyze IBR results and compare them

with program progress to determine the impact of IBRs. This is not possible with each acquisition

organization performing IBRs differently.

From a Program Management Office (PMO) perspective, the objective is to get an executable

Performance Measurement Baseline (PMB) as soon as possible. A related objective is to ensure mutual

contractor / Government understanding of all the risks associated with the PMB. From a contractor’s

perspective, the joint collaborative efforts help the contractor understand the customer expectations better.

It also provides the contractor’s customer with insight into the methods and processes used to develop the

product.

A great portion of the IBR process is devoted to reviewing and evaluating data. Terms

used for data merit explanation. The term “artifact” is used to describe some information

that may or may not be a standalone document. One example of an artifact is the

program critical path that is displayed in the IMS. The term “document” refers to

elements of data that are standalone entities. The Contract Performance Report (CPR) or

Integrated Program Management Report (IPMR) are documents. Several other terms for

data are used in this document. The term “deliverable” or “Contract Deliverable

Requirements List (CDRL) item” refers to data elements formally delivered from

contractor to Government as specified in the contract. The IMS is an example of a CDRL

item or deliverable. The final term “data call items” are items that are not deliverables

but needed for the IBR process. The Control Account Plan (CAP) is an example of a data

call item.

Some artifacts are assessed for quality in the IBR process. Others, vetted by other

processes (such as contract negotiations), are source documents for integration traces.

Some artifacts may be evaluated for quality (format and content) and evaluated as part of

integration traces to ensure the PMB is consistent across the family of program

documents. Some artifacts such as the Work Authorization Documents (WAD) are

available for use, as appropriate, in CAM discussions.

2.2. Incremental / Phased Approach

In a traditional IBR, some considered the IBR Exit Briefing the key deliverable of the IBR, but here the

IBR Exit Briefing is merely the culmination of a process. The process focuses on the quality and

integration of program documentation via a series of reviews or meetings that precede the IBR Exit

Briefing. These reviews are conducted by IBR phase and help the Government and contractor teams

concentrate on the quality of contractual documentation and the five risk areas in a sequential manner.

The figure below (with notional timing) reflects the two phases of the IBR process.

IBR Phases

From the time the IBR process begins and through IBR Closeout, the team identifies, addresses, and

tracks actions. The IBR process also entails an assessment based on data call readiness; that is, the ability

of the Government and contractor teams to provide the necessary data in an adequate format (see section

3.3.1). Rather than wait for the interview portion later in the IBR process to find too many unanswered

questions or integration issues, the IBR process provides opportunity to address PMB quality and

integration issues early and throughout the process.

Note: The term “incremental IBR” is often used to reflect a series of IBRs at each point

where a significant amount of detailed planning occurs. As an example, an IBR is

conducted on the path from contract award to Preliminary Design Review (PDR).

Another IBR is conducted for the path from PDR to Critical Design Review (CDR). The

“incremental” nature of the process described herein is that the Government and

contractor teams are jointly participating in and evolving the PMB. This process supports

incremental IBRs as described above.

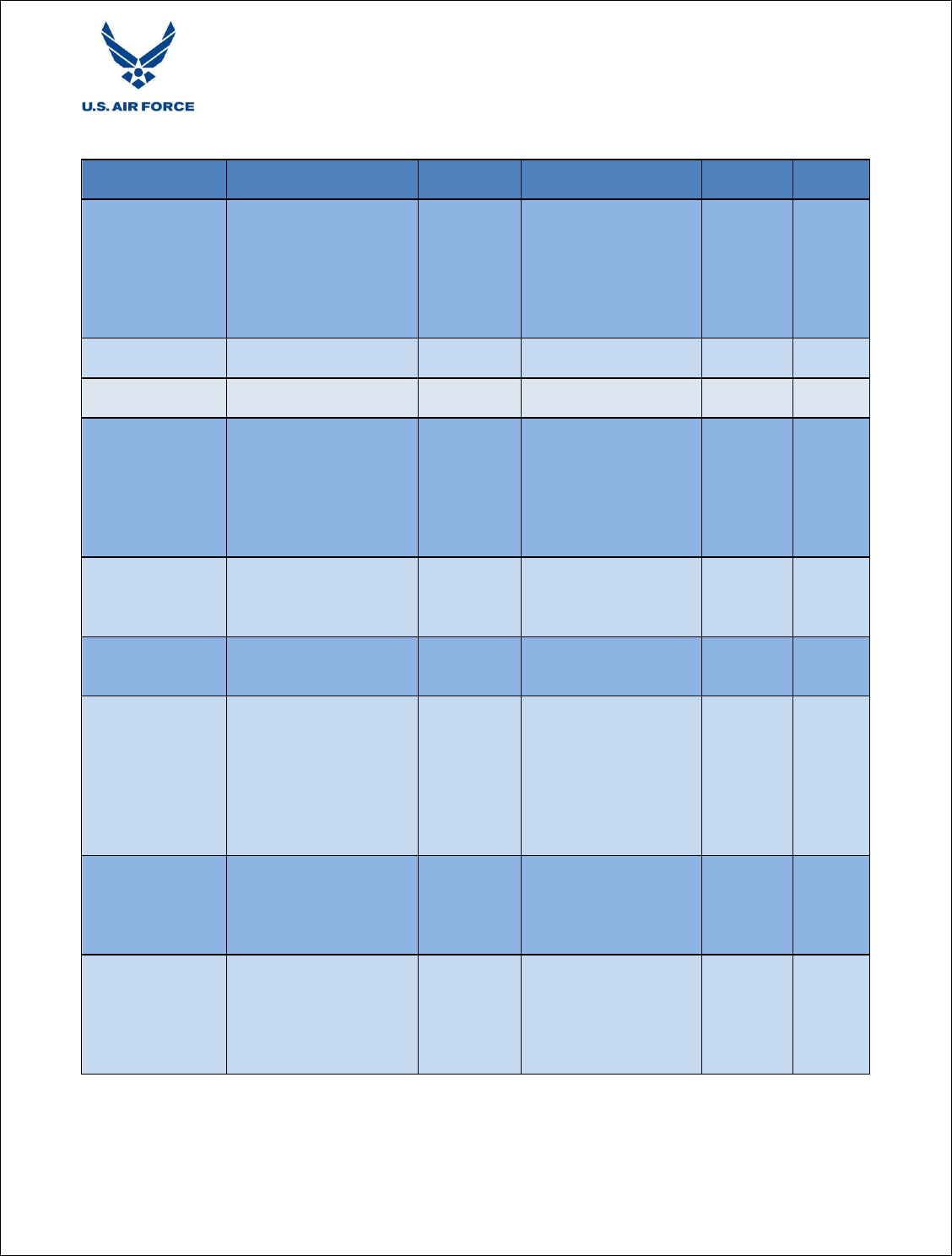

The table below lists the major activities of this IBR Process and notional timing aspects, depicted as

calendar days in relation to Contract Award (CA).

| Activity | Participants | Timing |
| --- | --- | --- |
| Initial Meeting to Kick-off the IBR process | Government Agencies | CA-60d |
| Earned Value Management (EVM) Basics and Analysis Training | Government Agencies | CA-30d |
| Contract Award | | CA |
| Post Award Conference / Joint IBR Expectations to introduce the IBR process | Government and contractor | CA+15d |
| Notification / Call for data to determine preliminary schedule and data call list | Government Agencies | CA+30d |
| IBR Process Walkthrough with Joint IBR Team to finalize IBR schedule, artifact list and integration checks | Government and contractor | CA+45d |
| Assign teams for each topic area | Government and contractor | CA+45d |
| Begin Artifact Quality Assessments | Government and contractor | CA+60d |
| Begin Data Integration Assessments | Government and contractor | CA+70d |
| Begin Periodic Quality and Integration Progress Meetings | Government and contractor | CA+77d |
| IBR Readiness Review to initiate CAM and Business Office Discussions | Government and contractor | CA+100d |
| IBR CAM Discussion Training | Government and contractor | CA+105d |
| Begin CAM and Business Office discussions | Government and contractor | CA+110d |
| IBR Readiness Decision to conduct IBR Exit Briefing | Government and contractor | CA+130d |
| Formal conduct of the IBR Exit Briefing | Government and contractor | CA+145d |
| Issue coordinated IBR Report | Government and contractor | CA+155d |
| Complete critical open action items and declare IBR complete | contractor | CA+155 to CA+180 |

Major IBR Activities
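Because the table above expresses timing as calendar-day offsets from Contract Award, a program can turn it into notional calendar dates once an award date is known. The following is a minimal sketch under that reading; the function name and the sample award date are assumptions for illustration, and the activity names are abbreviated from the table.

```python
from datetime import date, timedelta

# Notional offsets in calendar days relative to Contract Award (CA),
# copied from the Major IBR Activities table.
ACTIVITY_OFFSETS = [
    ("Initial Meeting to Kick-off the IBR process", -60),
    ("EVM Basics and Analysis Training", -30),
    ("Contract Award", 0),
    ("Post Award Conference / Joint IBR Expectations", 15),
    ("Notification / Call for data", 30),
    ("IBR Process Walkthrough with Joint IBR Team", 45),
    ("Assign teams for each topic area", 45),
    ("Begin Artifact Quality Assessments", 60),
    ("Begin Data Integration Assessments", 70),
    ("Begin Periodic Quality and Integration Progress Meetings", 77),
    ("IBR Readiness Review", 100),
    ("IBR CAM Discussion Training", 105),
    ("Begin CAM and Business Office discussions", 110),
    ("IBR Readiness Decision to conduct IBR Exit Briefing", 130),
    ("Formal conduct of the IBR Exit Briefing", 145),
    ("Issue coordinated IBR Report", 155),
    ("Declare IBR complete (latest notional date)", 180),
]


def notional_schedule(contract_award: date):
    """Return (activity, calendar date) pairs offset from the award date."""
    return [
        (name, contract_award + timedelta(days=offset))
        for name, offset in ACTIVITY_OFFSETS
    ]


if __name__ == "__main__":
    # Hypothetical award date, used only to show the output format.
    for name, when in notional_schedule(date(2013, 1, 15)):
        print(f"{when.isoformat()}  {name}")
```

The offsets are notional, so the resulting dates are a planning starting point rather than a commitment; the joint team finalizes the actual IBR schedule during the process walkthrough.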

The chart below shows the relationship of the various IBR activities.

[Figure: flow diagram. Boxes, in reading order: Form Govt IBR Team; Govt IBR Team Orientation; Initial Supplier Interface Meeting; Form Joint IBR Team; Joint IBR Team Training (Process); Define Artifact Quality & Integration Traces; Artifact Quality & Traces for IPT #1, IPT #2, IPT #3, through IPT #N; IBR Data Readiness Review; Joint IBR Team Training (Discussion); Contractor BMO Discussion; CAM Discussion Sessions #1, #2, through #n; Phase II Status Review; IBR Exit Briefing. Supporting tracks: Data Improvement Efforts; CAM Improvement Efforts.]

Top Level IBR Process Flow

2.3. Benefits of Revised Air Force Process

The table below compares the traditional single-event IBR process with the transformed, incremental IBR

process.

| Traditional IBR Process | Transformed IBR Process |
| --- | --- |
| Focused on EVM / Compliance | Focused on program execution risks and opportunities |
| Cumbersome storyboards to depict integration | Risk topic areas with standard artifacts |
| Interviews with varying questions | Discussions based on risk areas |
| CAM Interviews including data traces | Focus on control account risks and performance measurement baseline |
| Multiple data providers | One Government and one contractor focal point for each artifact and integration trace |
| Administratively burdensome reviews and interviews | Fewer discussions with standard formats and clear expectations |
| Inconsistent CAM Interview questions / approach | Consistent discussion focused on risk in the PMB |
| Administratively burdensome post-reviews (Discrepancy Reports (DRs), Corrective Action Requests (CARs), Corrective Action Plans (CAPs)) | Action Tracker Report with specific actions by function |
| One-size-fits-all | Flexible / tailorable IBR depending on phase, size, complexity of program |
| Inconsistent application of the process; each center has its own process | Consistent, clear expectations and standard methodologies |
| Evaluation criteria not clear | Transparent, clearly defined guidelines |
| IBR is a short duration of intense activities | IBR Exit Briefing is anti-climactic; merely a final assessment step |
| IBR results inconclusive or incomplete | IBR Go / No-Go assures when IBR is conducted the results are meaningful |
| Government-only Assessment | Joint contractor / Government Assessment |

Traditional versus Transformed IBR Process

3. Sequential Description of IBR Activities

This section walks through the IBR process chronologically beginning with a Request for Proposal (RFP)

preparation and culminating with the declaration of IBR completion.

3.1. RFP Preparation / Pre-Award Activities

RFP Preparation and Pre-Award activities begin when the Government identifies a requirement for a

Request for Proposal or Quote (RFP or RFQ) solicitation with an EVM requirement. The incremental

nature of this IBR proceeds more smoothly if RFP documents reflect this process. Tailor sections of the

RFP to reflect the IBR conduct. Some programs find it helpful to write a short concept of operations

(CONOPS) about how they execute the contract from an EVM perspective. That document helps to

identify contract documents tailored for the IBR and program execution. The chart below shows potential

RFP tailoring for the IBR.

[Figure: flow diagram. Boxes, in reading order: Tailor IBR Event in IMP; Tailor IPMR / IMS CDRL / DID for CONOPS; Tailor SOW for IBR process & CONOPS; Tailor IPMR / CPR CDRL / DID for CONOPS; Release RFP; Respond to bidder questions on IBR process; Evaluate Proposal; IBR Process Document; Develop EVM Program Execution CONOPS.]

Pre-award Activities

3.1.1. RFP Document Tailoring for IBR

The Statement of Work (SOW) or Performance Work Statement (PWS) should include a description of

the incremental nature of the IBR process and refer to the Air Force IBR Process Document for guidance.

Consider adding SOW wording to require the contractor to support requests for non-CDRL items as part

of the IBR data call.

Note: Statement of Work (SOW) and Performance Work Statement (PWS) are two terms

used to define the scope of work for the contractor. Where SOW is used in the document

it refers to SOW or PWS as applicable.

The contract deliverables should be consistent with the incremental schedule of the IBR. The delivery

schedule of PMB defining documents (IMS, CPR, and IPMR) should be consistent with the preliminary

IBR schedule. The IMS is especially important as it reflects the contractual SOW and is the primary

instrument used to determine project status and IMS, CPR, and IPMR CDRL reporting. The Air Force

maintains processes and tools to assess the contractor IMS and provide essential feedback to improve this

critical deliverable. Granularity requirements (i.e. activity duration limits) should be included in the SOW

or tailored Data Item Description to ensure that the Government gets the visibility needed to manage the

program. Consider setting limits on rolling wave planning, such as requiring that at least the first year

after establishment of the PMB be detail planned (no planning packages) or that detailed planning extend to a

specified program event.
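The granularity and rolling wave limits described above lend themselves to an automated check against IMS task data. The sketch below is an assumption-laden illustration: the `Task` fields, the 60-day duration limit, and the 365-day detail-planning window are placeholders, and the real limits would come from the SOW or the tailored Data Item Description.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Task:
    """Hypothetical, simplified view of one IMS activity."""
    task_id: str
    start: date
    duration_days: int
    is_planning_package: bool = False


def granularity_findings(tasks, pmb_date, max_duration_days=60, detail_window_days=365):
    """Flag tasks that break the illustrative granularity rules.

    Both limits are placeholders for values a program would set contractually.
    """
    window_end = pmb_date + timedelta(days=detail_window_days)
    findings = []
    for t in tasks:
        # The duration limit applies to detail-planned tasks only.
        if not t.is_planning_package and t.duration_days > max_duration_days:
            findings.append((t.task_id, "exceeds activity duration limit"))
        # Planning packages are not allowed inside the detail-planning window.
        if t.is_planning_package and t.start < window_end:
            findings.append((t.task_id, "planning package inside detail-planning window"))
    return findings


if __name__ == "__main__":
    # Hypothetical tasks and PMB date, chosen only to exercise both rules.
    sample = [
        Task("A", date(2013, 3, 1), 30),
        Task("B", date(2013, 4, 1), 90),
        Task("C", date(2013, 9, 1), 120, is_planning_package=True),
        Task("D", date(2014, 6, 1), 200, is_planning_package=True),
    ]
    for task_id, issue in granularity_findings(sample, pmb_date=date(2013, 2, 1)):
        print(f"Task {task_id}: {issue}")
```

A program that ties detailed planning to a program event rather than a fixed first year would replace the window calculation with that event's date.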

On large, complex programs, the IMS may be the document that drives the IBR schedule. It is

common to take up to four months after contract award to develop a credible IMS. Compare the draft IBR

schedule with the deliverables schedule proposed in the RFP to ensure they allow for an incremental IBR.

When an Integrated Master Plan (IMP) is included in the RFP, adjust the Events, Accomplishments and

Criteria to reflect this IBR process. A sample template for the IBR Program Event is contained in Section

6.2.

Tailor the CPR / IPMR Data Item Description (DID) to ensure the CPR / IPMR provides the appropriate

level of visibility. Specify the level of the Work Breakdown Structure (WBS) that the contractor reports

on the CPR / IPMR. A review of MIL-STD-881 helps the Government PMO make this determination as

contractors use this as guidance when preparing the Contract Work Breakdown Structure (CWBS).

3.1.2. IBR Process in Blended Contract Environments

There may be cases where EVM is not flowed from the prime contractor to a number of suppliers. This is

most common where suppliers are providing goods or services at a Firm Fixed Price. The EVM clauses

in the contract specify when EVM is to be flowed down to suppliers. Remember to flow EVM

down to any non-US subcontractors, if EVM is required per the contract clauses.

When there is no flow down of EVM, a supplier does not have to support an IBR unless otherwise

specified in a contract clause or SOW. In this case, it is the prime contractor’s responsibility to get the

supplier information necessary to establish the PMB and identify any risks associated with executing to

that baseline. During the IBR, the prime contractor CAM that has responsibility for that supplier must be

able to address each of the risk area topics pertaining to that supplier. This means the CAM must be able

to explain the supplier’s role in the program describing related items in technical, schedule, resources,

costs, and management processes for all risks.

Large portions of the PMB may be provided by suppliers without an EVM requirement. Add wording to

the SOW similar to that in the paragraph above and specify the visibility of supplier information required

in the IMS.

The term “blended contracts” can mean either a combination of Firm Fixed Price (FFP) and EVM-applicable Contract Line Item

Numbers (CLINs) in a contract, or situations where subcontractors are not required to use EVM on a

contract where the prime is required to use EVM. In this document, the term is used relative to CLINs. In

a blended contract environment where a large percentage of the contract is Firm Fixed Price (FFP)

through suppliers, the use of multiple CLINs can help provide the granularity needed for the EVM portion

of the contract. As an example, consider a case where 50% of the contract effort is performed by a single

supplier with a FFP purchase agreement. On the CPR, this supplier’s Budgeted Cost of Work Performed

(BCWP) and Actual Cost of Work Performed (ACWP) would always be equal and could distort program

level Cost Performance Index (CPI) calculations. Having this supplier’s work scope as a separate CLIN

and having separate CPRs or IPMRs for different CLINs would prevent this distortion.
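The distortion described above can be seen with a small worked calculation, using the standard definition CPI = BCWP / ACWP. The dollar figures below are hypothetical and chosen only to mirror the 50% FFP example; they are not drawn from any program.

```python
def cpi(bcwp: float, acwp: float) -> float:
    """Cost Performance Index: BCWP divided by ACWP."""
    if acwp <= 0:
        raise ValueError("ACWP must be positive to compute CPI")
    return bcwp / acwp


# Hypothetical cumulative values in $M, for illustration only.
ffp_supplier = {"bcwp": 50.0, "acwp": 50.0}  # FFP effort: BCWP and ACWP always equal
evm_work = {"bcwp": 40.0, "acwp": 50.0}      # remaining effort, running over cost

# One report covering all scope: the FFP supplier pulls CPI toward 1.0.
blended = cpi(
    ffp_supplier["bcwp"] + evm_work["bcwp"],
    ffp_supplier["acwp"] + evm_work["acwp"],
)

# Separate CLIN and report for the EVM-applicable work only.
separate = cpi(evm_work["bcwp"], evm_work["acwp"])

print(f"Single report, all scope combined: CPI = {blended:.2f}")
print(f"Separate CLIN, EVM work only:      CPI = {separate:.2f}")
```

With these numbers the combined report shows a CPI of 0.90 while the EVM-applicable work alone is at 0.80, so the blended figure understates the cost problem on the portion of the contract where cost performance can actually vary.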

See Section 4.2 Scoping the Performance Measurement Baseline for additional discussion of this subject.

3.1.3. Definition of Roles and Responsibilities

Definitions of roles and responsibilities for EVM and the IBR should be determined prior to award. The

PMO team should understand the roles and responsibilities defined below. After award, communicate the

roles and responsibilities to the contractor through the SOW or Post Award Conferences, Program Startup

Workshops, or other meetings. Several organization and individual roles and responsibilities are defined

below.

3.1.3.1. IBR Roles (Government and contractor)

Program Managers (PM) (Government and contractor): The Government PM owns and leads the IBR

process. The contractor PM is a co-leader of the IBR process but final decision authority rests with the

Government PM. The PMs ensure the program team gives necessary priority and dedication to prepare

for, conduct, and support follow-up for each activity. They help facilitate the various sessions and reviews

by requiring attendance, holding the team to the rules of engagement, and adhering to agendas.

Government and contractor PMs assign the panel of key program team members and external experts (if

required) who help conduct the IBR and document the formal IBR results / assessment.

IBR Integrators (Government and contractor): The Government PM selects the Government IBR

Integrator with the following characteristics:

The Integrators have a good perspective of integration (able to see the big picture of how

things fit together, how systems, subsystems, data, work, and the teams integrate).

A strong program knowledge and cost / schedule background is essential.

Technical knowledge is a plus.

The contractor PM selects the contractor IBR Integrator. These pivotal roles require individuals who are

organized, detail-oriented, objective, and respected by the program team. The two IBR Integrators remain

in their roles to provide continuity and consistency throughout the IBR process as much as practical. The

two IBR Integrators run the various meetings. This includes handling logistics, sending invitations,


conducting the meetings, and managing follow-up tasks. They ensure the CAMs understand their IBR

roles and responsibilities and facilitate guest speakers that may conduct training. The Government IBR

Integrator maintains the master data set for the IBR. The IBR Integrators compile actions, risks,

opportunities, and questions as submitted.

Artifact Experts (Government and contractor): PMs and the IBR Integrators assign one Government

and one contractor expert for each artifact. These individuals are experts or expected to become experts

for their respective artifacts. They perform data trace presentations and help guide the team to evaluate

integration and quality aspects of the artifacts. They explore and report on the artifact deficiencies with

objectivity, frankness, and an aim toward improving the artifact for the betterment of the program. These

identified deficiencies may develop into actions. Contractor Artifact Experts help CAMs determine the

level of artifact integration within their Control Account documents.

See Section 4.1, Organizing the Air Force IBR Team.

3.1.3.2. Defense Contract Management Agency (DCMA) Role

DCMA participates as an invited member of the Government IBR team. Specific roles and

responsibilities are defined based on availability of skills and experience. DCMA participation gives them

a better understanding of the program and the PMB. It also makes clear that the PMO priority is

establishing a credible PMB and understanding the risks rather than EVMS compliance. DCMA

participation also allows the contractor to provide additional insight into contractor methods and

procedures.

The origin of the IBR is in Earned Value Management. The IBR requirement began in 1993 based on the

application of EVM in the contract. This situation sometimes causes confusion in document quality

evaluations and identification of EVMS compliance issues.

While many of the risk areas touch EVM artifacts, the focus of this IBR process is not EVMS

compliance. Review artifacts considered EVMS documents for quality with an emphasis on the complete

and accurate identification of the PMB and the risks associated with meeting the program objectives.

If, in the performance of the AF IBR Process, significant potential EVMS compliance discrepancies are

discovered, the program office can document these potential discrepancies as risks in the appropriate risk

categories and provide the observations to DCMA. DCMA can further investigate the matter as part of

their surveillance role.

3.1.3.3. Supplier Roles in an IBR

As EVM flows down from the prime contractor to subcontractors / suppliers (hereafter referred to as

suppliers), the requirement for Supplier IBRs is established. That requirement may be satisfied in two

ways. The prime contractor and Government PM may agree to have a consolidated IBR and the supplier’s

portion of the PMB is addressed as part of the overall program. Another option is to conduct separate

Supplier IBRs led by the prime contractor PM with participation from the Government PMO. This section

discusses both approaches.

The Government PM and the prime contractor may agree to have a consolidated IBR. This is often the

choice when the prime contractor is using the “One Team” concept. Under this concept, supplier CAMs

perform at the same level as prime contractor CAMs. The contractor team appears as one contract with

one set of EVMS deliverables to the Government Program Office. This concept is frequently used when

the suppliers are business units of the prime contractor.


Under the “One Team” concept, the supplier’s portions are awarded almost simultaneously with the prime

award, enabling the incremental approach (maturing artifacts, integration and risk identification) of this

IBR process to succeed. This IBR process focuses on the PMB and the risks associated with executing the

program. EVMS compliance down through the suppliers is the responsibility of DCMA, alleviating this

duty from the IBR team. As a result, under this “One Team” approach the number of artifacts should not

be significantly increased. For example, a supplier’s effort can normally be identified as a specific section

of the prime contract’s SOW and WBS.

When EVM is flowed down to suppliers, the Prime contractor and Government PM may elect to conduct

separate IBRs for the suppliers instead of a consolidated IBR. In this approach, the suppliers deliver a set

of EVMS artifacts to the prime who, after consolidating the data into the prime’s EVMS artifacts,

forwards the supplier artifacts to the Government.

From a risk management perspective, it is desirable to complete any separate supplier IBRs and Integrated

Risk Assessments (IRA), if applicable, before finalizing the prime contract IBR. That way all risks

identified at the lower level can be flowed into the top level IBR. In some cases, the supplier’s contracts

may not be awarded simultaneously with the prime contract. If there is a delay of several months, it may

not be possible to execute the supplier IBR using the approach described in this process document. It may

be necessary, due to timing, to revert to the traditional IBR approach (last minute data call and

compressed IBR activities) for suppliers.

When conducting the supplier IBR is not possible within the period of the Prime contractor’s IBR, then

the risks associated with that supplier’s PMB are addressed in the Prime contractor IBR. As a minimum,

the inability to perform the supplier IBR prior to the completion of the prime IBR should be reflected in

each applicable risk area (schedule, cost, technical, resources, and management processes). See also

Section 4.2, Scoping the Performance Measurement Baseline.

3.2. Immediate Post Award Activities

Having completed the pre-award activities, the next efforts are associated with jointly preparing the plans

and assigning individual roles and responsibilities to conduct the IBR.

3.2.1. IBR Process Introduction and Detailed Planning

Soon (fifteen to thirty days) after contract award, the Government PMO team should meet with the

contractor team to introduce the IBR process in detail and plan the execution of the IBR. The introduction

should address roles and responsibilities, the IBR phases, artifact selection / integration, and CAM

selection criteria for Phase II discussions. If formal notification of the IBR is appropriate, a notification

letter may be prepared. Section 6.3 contains a sample notification letter. If there is a face-to-face meeting

such as at a Program Startup Workshop, joint agreement may be reached on the following topics.

3.2.1.1. Artifact Identification

Section 6.4 of this document contains a Sample Artifact List. This is a list of the most common artifacts

used in IBRs across a variety of acquisition phases. Consider including artifacts from this list in

the IBR if they define the PMB or flow the PMB down to the execution level (control account). The

program management triangle with technical, schedule, and cost sides is a good representation of the

PMB.


The technical side represents the requirements for the product or services created. The schedule side

represents the activities, durations, and sequence of work. The cost (resource) side represents the various

assets used to create the product or services. The artifacts selected represent all three sides. The Joint IBR

team should review this sample artifact list and decide which artifacts are applicable for this IBR.

Following that step, they should further identify which documents are already “vetted” and not subject to

quality evaluation. They should also determine which artifacts are deliverables (CDRL items) and which

are non-CDRLs with delivery addressed in the data call list.

3.2.1.2. Data Call Identification

The artifacts examined for the IBR include deliverables (CDRL items), as well as non-CDRL items such

as control account plans, work authorizations, and other EVMS data elements. These non-CDRL items

are defined in the Sample Artifact List. Take time to ensure that all parties understand these data call

items, as contractors and EVMS software often use different names and formats for these items. During

the review of the data call items, the team should note when these items are available. For example, work

authorization documents are often not finalized until after the baseline has been set.

3.2.1.3. Integration with Other Program Events

Depending upon the acquisition phase, the program structure and sequence of events, other program

events can be folded into the IBR, reducing or eliminating duplicate efforts. One example is the Systems

Requirements Review (SRR). This review duplicates some portions of the review of technical artifacts

and the trace of system requirements from an Initial Capabilities Document (ICD) or Capability

Development Document (CDD) to System Requirement Document (SRD) or System Specification (SS).

Another example is the IRA. Results from an IRA may streamline the required SRA activities. The IBR

team should consider these other events in the scheduling of the IBR activities.

3.2.1.4. IBR Schedule Preparation

As the delivery dates for the CDRL and non-CDRL artifacts are finalized, the detailed IBR schedule can

be completed. A template in Microsoft Project is included in Section 6.1. A copy of the file in Microsoft

Project format is available from SAF/AQXC.

When preparing the schedule, the joint IBR team should include schedule margin in the plan so that

completion of the IBR within 180 days of contract award is possible. The schedule should be statused

weekly and available to all members of the IBR team.
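
The schedule margin described above can be checked with simple date arithmetic. The following is a minimal sketch; the function name and both dates are hypothetical, and only the 180-day window comes from this guide.

```python
from datetime import date, timedelta

IBR_WINDOW_DAYS = 180  # completion window after contract award, per this guide


def ibr_schedule_margin(award: date, planned_finish: date) -> int:
    """Days between the planned IBR finish and the 180-day limit.

    A negative result means the plan finishes after the limit.
    """
    deadline = award + timedelta(days=IBR_WINDOW_DAYS)
    return (deadline - planned_finish).days


# Hypothetical dates for illustration only.
award_date = date(2013, 1, 15)
planned_exit_briefing = date(2013, 6, 20)

margin = ibr_schedule_margin(award_date, planned_exit_briefing)
print(f"Schedule margin: {margin} days")
```

Recomputing the margin at each weekly status update gives the joint IBR team an early indication when slipping artifact deliveries begin to consume it.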


3.2.2. Joint IBR Team Assignment of Responsibilities

After agreeing on the IBR schedule, individuals are assigned specific roles and responsibilities. This

includes the Artifact Experts, as well as risk area leaders.

The EVMIG documents five risk areas for identifying IBR risks (technical, cost, schedule, resources, and

management processes). The IBR process in the EVMIG is organized around those risk areas so that

integration teams are formed for each of those five areas. Today, most major acquisition programs use the

IPT approach in both the Government and contractor organizations. In some cases, the Government and

contractor IPTs align exactly and joint IPTs are easily formed. The five risk areas do not align perfectly to

the IPT structures and may create some confusion in the initial organization of the IBR process. The Joint

IBR Team may elect to align responsibilities by IPTs, but retain reporting by the EVMIG risk areas.

3.3. IBR Phase I Artifact Quality and Data Integration

After the IBR team is organized and the IBR schedule developed, Phase I begins. Phase I is dedicated to

evaluating artifact quality and assessing data integration. Initial steps define the standards for measuring

the artifact quality and data integration. The artifacts and data integration points are then assigned to

teams and evaluated.

3.3.1. Jointly Defining Artifact Quality

During the initial post award activities, artifacts are identified for quality evaluation. The next step is joint

determination of the standard or quality proofs for each artifact. A number of quality standards may be

established for the artifacts:

Adequate quality of key program documentation may be defined as complying with contractual

CDRL requirements, as well as any DIDs referenced in the applicable CDRL.

Where a CDRL does not exist, the quality of a document can be established based on the

informed and experienced judgment of the document’s Artifact Experts, considering

characteristics such as document clarity, completeness, and conformance to common industry

standards.

Establishing the quality of a document should take into account if it has sufficient data elements

for the standard cross-documentation data traces defined in this document.

Section 6.4 contains a list of common artifacts, their most appropriate risk or IPT category and a narrative

description of desired artifact quality. The IBR team should review and update this list for their particular

program. It is extremely important that the definitions of artifact quality standards are agreed to by both

contractor and Government IBR teams. Taking the time to reach consensus on these proofs early in the

process avoids downstream confusion and helps keep the process on track.

In addition to “deliverable” artifacts, the IBR also requires supporting documents. These documents are

normally requested through a data call letter. To avoid incorrect interpretations, the data call letter for

these other artifacts should contain enough definition that the contractor can readily determine the format,

content, and acceptability of each requested data item. A sample data call request is contained in Section

6.5.

Document quality should receive one of two scores: (1) Adequate Quality: quality criteria are met and

there are no open action items regarding quality aspects; or (2) Inadequate Quality: quality criteria are


not met. Artifacts judged to have inadequate quality should have associated action items. The status and

scoring of artifacts can be maintained by adding a scoring column to the artifact list spreadsheet.

It is important to maintain the current artifact scoring during the incremental IBR process. As corrective

actions are taken, an artifact status may move from “Inadequate” to “Adequate.” The Government IBR

Integrator should maintain the current status / scoring of all artifacts.
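
The two-level artifact scoring can be sketched as a small record per artifact. This is a hypothetical illustration, not a required tool: the class, field names, and action item text are assumptions, as is the choice to treat an artifact with any open quality action item as Inadequate even if its criteria are otherwise met.

```python
from dataclasses import dataclass, field


@dataclass
class Artifact:
    """One row of the artifact list with a scoring column (hypothetical layout)."""
    name: str
    criteria_met: bool
    open_quality_actions: list[str] = field(default_factory=list)

    def score(self) -> str:
        # Assumption: Adequate requires criteria met AND no open quality actions.
        if self.criteria_met and not self.open_quality_actions:
            return "Adequate"
        return "Inadequate"


# Hypothetical artifact with one open action item.
ims = Artifact("IMS", criteria_met=False,
               open_quality_actions=["AI-001: missing logic links"])
first_score = ims.score()

# Corrective action is taken and the action item is closed.
ims.criteria_met = True
ims.open_quality_actions.clear()
second_score = ims.score()

print(first_score, "->", second_score)
```

Keeping the score derived from the open action items, rather than entered by hand, helps the status column stay current as corrective actions close.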

3.3.2. Jointly Defining the Data Integration Proof Statements

Data traces verify the integrity of the baseline from Government source documentation through the

contractor baseline at all levels. Data traces may flow from top-level program requirement documents,

such as the CDD, through to control account work authorizations. Data integration proof statements

explain the order or flow of documentation, the purpose of the data trace, the scope, risk area or

applicable IPT, and the proofs. The relationship of program documentation may vary between programs.

The IBR teams must review and agree upon these data relationships to establish an accurate standard to

score the existing documentation through data traces. Section 6.6 contains a sample template reflecting

these relationships.

Data traces are scored as “Adequate” or “Inadequate.” Traces rated as inadequate should have

corresponding action items to restore the artifacts to an adequate rating. Common discoveries during

traces include:

Scope of work in SOW not assigned to any control account

Differences in work scope between SOW, WBS Dictionary, or Work Authorization

Work scheduled inconsistently to the time-phased budget for that work

Section 6.6 contains a list of common documentation traces. The IBR Team should use this table as a

starting point and refine it to match the particulars of their program.
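
One of the common discoveries listed above, scope in the SOW that is not assigned to any control account, lends itself to a simple set comparison. The sketch below assumes a hypothetical data layout in which each control account lists the SOW paragraphs it covers; the paragraph numbers and account identifiers are invented for illustration.

```python
# Hypothetical SOW paragraphs and the SOW references cited by each control account.
sow_paragraphs = {"3.1", "3.2", "3.3", "3.4"}
control_account_scope = {
    "CA-100": {"3.1"},
    "CA-200": {"3.2", "3.3"},
}


def unassigned_scope(sow: set[str], accounts: dict[str, set[str]]) -> set[str]:
    """Return SOW paragraphs that no control account references."""
    assigned = set().union(*accounts.values())
    return sow - assigned


gaps = unassigned_scope(sow_paragraphs, control_account_scope)

# A trace with any unassigned scope is scored Inadequate and needs an action item.
trace_score = "Adequate" if not gaps else "Inadequate"
print(sorted(gaps), trace_score)
```

The same pattern can be reversed to find control accounts that cite SOW paragraphs that do not exist, which is another form of scope mismatch between documents.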

3.3.3. Assigning and Evaluating Artifact Quality and Data Integration

After artifact quality standards and data integration proof statements have been finalized, the artifacts may

be assigned to teams for evaluation. Teams should periodically report evaluation status to the IBR

Integrator.

The AF IBR Process touches artifacts at least twice. The first review of an artifact is an assessment of its

quality. If the artifact is a contract deliverable, the contract including SOW, CDRL, and DID outline the

requirements for the artifact. Normally, an artifact that meets these requirements is considered to have

satisfactory quality. Sometimes during an IBR, additional standards may be established for an artifact,

such as meeting the DCMA 14 Point Assessment Criteria for an IMS. The higher standard may be

imposed to ensure that related activities like the Schedule Risk Assessment (SRA) yield credible results.

As the teams evaluate the artifact quality and data integration, they should record any anomalies. This is

best accomplished with screenshots accompanied by a written description of the anomaly. This helps the

artifact owner to understand what corrections are required. When one or more anomalies represent a

significant update or correction that needs to be made, an action item should be prepared and forwarded to

the IBR Integrator. The report should state clearly what standard or proof is not met, provide an example,

and include the recommended corrective action. The IBR Integrator keeps a master list of the action

items. If the anomaly is sufficiently severe, record it as a risk in order to determine the severity of its


consequence. Since the evaluation team is composed of both contractor and Government personnel,

feedback from the evaluation to the organization responsible for correcting the artifact should be

immediate.

There may be a direct relationship between artifact quality and data integration. If artifact quality is not

acceptable and the trace team is unaware of the documented quality issues, the trace may not be accurate

or it may require repeating the trace after artifact revision. Since different personnel may be assessing an

artifact at the same time (data quality and integration trace), it is recommended that the IBR Integrator

hold a weekly status review for all IBR team members.

3.3.4. Readiness Review for Transition to Phase II

As artifact quality and data integration evaluations are concluding, the team should be preparing for a

Readiness Review. The purpose of the Readiness Review is to present the status of Phase I of the IBR to

both Government and contractor PMs and gain approval to begin Phase II, the CAM and Business Office

discussions.

The Readiness Review should include a review of all artifact quality and data integration checks. The

review should also include a review of all action items, both those closed as well as any items still open.

Section 6.7 contains a sample Readiness Review briefing outline.

Some artifacts may not be adequate by the time of the Readiness Review. In this case, the IBR leadership

may elect to continue artifact improvements and initiate the second phase of the IBR (CAM Discussions)

or delay Phase II. This decision should consider whether the artifacts’ condition would affect or invalidate

the discussions of control account details.

3.4. IBR Phase II – CAM and Business Office Discussions

After receiving a go-ahead from the IBR leadership, the second phase of the IBR process begins. During

this phase, Business Office and CAM discussions are conducted. Business Office discussions focus on the

management processes used by the contractor to implement and manage the program using Earned Value.

The management processes discussed should include those pertaining to CAM roles and responsibilities.

There are two advantages to having separate Business Office discussions. First, the processes and

procedures provide a background for the CAM discussions. Second, the discussions do not need to be

repeated during the CAM discussions, allowing the focus to be on control account-related risks. During

the Business Office discussions, the contractor explains any program unique EVMS procedures, as well

as a review of the information systems / software used for EVM.

3.4.1. Business Office Discussion Topics

The following are potential topics for Business Office discussions:

Work authorization process and common documentation

Contract Budget Base (CBB) Log and the Management Reserve (MR) / Undistributed Budget

(UB) guidance

How routine EVM information is developed and maintained

Introduction to the IMS, including integration of supplier / contractor data

How subcontracts / suppliers are managed


Role of Program Control and CAM

How the PMB Status is maintained

3.4.2. Control Account / CAM Selection Criteria

The CAM selection criteria are discussed when the IBR process is originally introduced to the contractor.

At this point, the criteria are applied in the selection of control accounts and CAMs for discussions. The

selection criteria recommended are high dollar value control accounts, control accounts on the critical

path, and control accounts associated with high-risk events. A combination of the Responsibility

Assignment Matrix (RAM), IMS, and Risk Register is used for the CAM selection process. In addition,

a copy of the dollarized RAM will assist with the control account selection.

3.4.2.1. Percent of PMB Sampled

The EVMIG recommends selection of at least 80% of the PMB value for review. The sampling for an

IBR has been interpreted in various ways by IBR teams in the past. Some teams use PMB dollars to

calculate the recommended percentage for sampling. Others use the number of control accounts. Some

use direct labor hours. The guidance in the EVMIG is not specific on how the percentage should be

calculated. Additionally, the EVMIG implies that FFP subcontracts and FFP material items may be

excluded from selection. Control Accounts that are 100 percent LOE and properly removed from the

critical or driving paths in the IMS are also candidates for exclusion.
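
The effect of the calculation basis can be seen in a short sketch. The control account values below are hypothetical; the point is only that the same selection yields different sampled percentages depending on whether dollars, direct labor hours, or the number of control accounts is used.

```python
# Hypothetical control accounts: (budget in $K, direct labor hours, selected?)
control_accounts = [
    (5000.0, 20000.0, True),
    (3000.0, 30000.0, True),
    (1500.0, 25000.0, False),
    (500.0, 25000.0, False),
]


def percent_sampled(accounts, basis: str) -> float:
    """Percent of the PMB sampled, measured by 'dollars', 'hours', or 'count'."""
    def weight(account):
        dollars, hours, _selected = account
        return {"dollars": dollars, "hours": hours, "count": 1.0}[basis]

    total = sum(weight(a) for a in accounts)
    selected = sum(weight(a) for a in accounts if a[2])
    return 100.0 * selected / total


for basis in ("dollars", "hours", "count"):
    print(f"{basis}: {percent_sampled(control_accounts, basis):.0f}%")
```

With these illustrative values the selection covers 80 percent of the dollars but only half of the hours and half of the control accounts, so the joint IBR team should agree on the basis before reporting a sampled percentage.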

CAMs are often assigned a number of control accounts. Individual CAM discussions tend to focus on the

high or medium-risk control accounts to get a satisfactory amount of detail during the limited time

available for discussions. This raises the question whether credit should be taken for all CAM control

accounts when only one or two control accounts are discussed.

This IBR process recommends the following steps to select control accounts (that then determine CAMs)

for discussion sessions.

Identify and select all control accounts with a program level of medium or high risk, based on

probability multiplied by consequence.

Identify and select all control accounts on the current program critical path.

Remove the previously selected control accounts from consideration and select the next five to

ten highest dollar value control accounts.

Remove all the previously selected control accounts from consideration and have each

Government Program IPT select one control account from the remaining list that concerns them

the most based on the five IBR risk areas (technical, cost, schedule, resources, and management

processes).
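
The four selection steps above can be sketched as follows. This is an illustration under stated assumptions, not a prescribed tool: the `ControlAccount` fields, the 1 to 5 rating scales, the `MEDIUM_RISK_THRESHOLD` cutoff, and the sample data are all hypothetical. Only the order of the steps is taken from this guide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlAccount:
    ca_id: str
    budget: float           # dollar value
    probability: int        # hypothetical 1-5 rating
    consequence: int        # hypothetical 1-5 rating
    on_critical_path: bool


MEDIUM_RISK_THRESHOLD = 8   # assumed cutoff on probability x consequence


def select_control_accounts(accounts, ipt_picks, top_n=5):
    """Apply the four selection steps in order; return selected IDs in order."""
    selected = []

    def add(ca_ids):
        for ca_id in ca_ids:
            if ca_id not in selected:
                selected.append(ca_id)

    # Step 1: medium- or high-risk control accounts.
    add(a.ca_id for a in accounts
        if a.probability * a.consequence >= MEDIUM_RISK_THRESHOLD)
    # Step 2: control accounts on the current program critical path.
    add(a.ca_id for a in accounts if a.on_critical_path)
    # Step 3: next highest dollar value accounts among those remaining.
    remaining = [a for a in accounts if a.ca_id not in selected]
    remaining.sort(key=lambda a: a.budget, reverse=True)
    add(a.ca_id for a in remaining[:top_n])
    # Step 4: one pick per Government IPT from what is still unselected.
    known = {a.ca_id for a in accounts}
    add(ca_id for ca_id in ipt_picks.values()
        if ca_id in known and ca_id not in selected)
    return selected


# Hypothetical data; top_n is set to 1 only because the sample is tiny.
accounts = [
    ControlAccount("CA-01", 900.0, 4, 4, False),
    ControlAccount("CA-02", 400.0, 1, 2, True),
    ControlAccount("CA-03", 1200.0, 1, 1, False),
    ControlAccount("CA-04", 700.0, 2, 2, False),
    ControlAccount("CA-05", 100.0, 1, 3, False),
]
picks = select_control_accounts(accounts, {"Avionics IPT": "CA-05"}, top_n=1)
print(picks)
```

Because each step removes previously selected accounts from consideration, the returned order also records why each control account was chosen, which can be useful when explaining the selection to the contractor.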

The combination of the incremental IBR process with a quality review of artifacts and document traces

provides the IBR team more insight to potential problems than a single event IBR. This additional insight

reduces the need to “cover the waterfront” with a high percentage of control accounts sampled.

3.4.3. Scheduling and Conducting Discussions

Depending on the program size, complexity, number of CAMs, number of control accounts, and risks, the

CAM discussions are often conducted using one of these options:


Option 1: Individual CAM Discussions. Present CAMs one at a time (optionally including Government

and contractor IPTs and IBR stakeholders) to discuss the CAM's understanding of their area of

responsibility and to determine the risk levels associated with accomplishing the work scope of their

control accounts within cost and schedule. Direct the questions to individual CAMs. The CAMs should

demonstrate control account ownership, adequate span of control, and ability to understand and act on the

data to establish and execute the control account baseline. In some cases, allow CAMs to have support

staff such as planners, schedulers, or analysts from other disciplines (e.g., finance, earned value,

scheduling, or supply chain management) in attendance. CAM support staff may help answer some

questions but the CAM must demonstrate overall ownership and understanding of their control accounts.

Option 2: Round Table Discussions. Round table discussions include a gathering of a group of CAMs

(optionally including Government and contractor IPTs and IBR stakeholders) to discuss the CAMs’

understanding of their area of responsibility and to determine the risk levels associated with

accomplishing the program baseline within cost and schedule. This grouping of CAMs is normally

focused on a common WBS element such as Guidance and Navigation. Questions are directed to

individual CAMs and the CAMs respond in this public forum. Specific CAM knowledge and the

interdependencies among control accounts are explored during these discussions. If the program has a

significantly large number of CAMs, round table discussions may be divided into manageable subgroups

to facilitate thorough discussions. Round table discussions may require more preparation time, including a

dry run or demonstration to ensure that the flow of artifacts, questions, and data traces transition smoothly

and that enough time is allocated for the sessions.

Option 3: Combination of Options 1 and 2, above. The PMs and IBR Integrators can use a mix of both

techniques to assess CAM knowledge, data integration, and data quality.

3.4.4. CAM Questions and Discussion Topics

The IBR incremental process with artifact quality evaluations, data integration traces, and a Business

Office discussion session enables CAM discussions to focus on identifying risks at the control account

level. Section 6.8 provides a number of questions that may be used during the discussion sessions.

Additionally, Section 6.9 provides a CAM checklist. This checklist is a detailed list addressing CAM

knowledge, skills, and responsibilities. It should be shared with the CAMs prior to discussion sessions

and may be used by interviewers to prompt questions.

Occasionally IBR teams may attempt to limit CAM discussions due to time constraints or lists of

questions. The purpose of the IBR is a joint understanding of all risks associated with executing the PMB.

CAM and Business Office discussions are allowed to “deep dive” into any area that appears to contain

risks to program execution. This point needs to be made clear prior to any discussions.

3.4.5. CAM Scoring and Recording of Discussions

The IBR CAM discussions are assessed using a three-point scale: 1 for high risk, 2 for medium risk, and 3

for low risk. The scoring captures systemic issues and is used for the overall IBR assessment. Those

areas found to be scored as inadequate (1 or 2) are documented as actions and monitored through IBR

close out. The scoring criteria are contained in Section 6.10 and contain potential ratings for each of the

five risk areas (technical, schedule, cost, resources, and management processes).

Following each CAM discussion, the IBR team should convene to review observations and reach

consensus regarding any action items. Ideally, this session takes place the same day as the discussion. The


Government IBR Integrator determines the level of collaboration based on the contractor’s participation

and demonstrated management approach.

If the IBR team discovers a serious concern, the IBR Integrator is responsible for ensuring it is added to the

AF IBR Action Tracker and reported to the local DCMA or Defense Contract Audit Agency (DCAA) for

follow up.

A record of each CAM discussion should be prepared. The record should include:

General Information - CAM and interviewer names, date of discussion, and control accounts

addressed

Summary by risk area

New / additional risks identified

Action Items

Any Planned Follow Up

Summarize CAM discussion ratings at program level. The overall CAM discussions are rated Red,

Yellow, or Green for the categories of technical, schedule, cost, resources, and management processes.

For the overall scoring:

Green = 2.6 or greater

Yellow = less than 2.5 and greater than 2.0

Red = 1.9 or less
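
The roll-up from individual CAM discussion ratings to an overall color can be sketched as shown below. The thresholds as printed do not assign a color to every possible average (for example, exactly 2.0 or 2.5), so this sketch makes two labeled assumptions: averages are compared after rounding to one decimal place, and any average between the Red and Green thresholds is treated as Yellow. The function name and the sample ratings are hypothetical.

```python
from statistics import mean


def overall_color(ratings: list[int]) -> str:
    """Map the average of 1-3 CAM discussion ratings to Red, Yellow, or Green."""
    average = round(mean(ratings), 1)  # assumption: compare at one decimal place
    if average >= 2.6:
        return "Green"
    if average <= 1.9:
        return "Red"
    return "Yellow"  # assumption: covers 2.0 through 2.5 inclusive


# Hypothetical ratings from four CAM discussions, by risk area
# (1 = high risk, 2 = medium risk, 3 = low risk).
cam_ratings = {
    "technical": [3, 3, 2, 3],
    "schedule": [1, 2, 2, 1],
    "cost": [2, 3, 2, 2],
}

summary = {area: overall_color(r) for area, r in cam_ratings.items()}
print(summary)
```

The joint IBR team should agree on how boundary values are treated before scoring begins, so that the same averages always produce the same color.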

3.4.5.1. Translation of CAM discussions into Risks and Action Items

The CAM discussion records provide a mechanism to ensure that individually identified risks and action

items are included in the overall IBR history and scoring. Overall scoring of CAM discussions is

recorded for each of the risk topic areas. CAM discussion ratings that are less than Green are expected to

have program level recommendations associated with them. As an example, consider that the average

Schedule rating for all CAM discussions is red and the most cited comment is that CAMs cannot

determine if they are on the critical path. Then a general recommendation or action item for CAM training

in scheduling theory and reading schedule reports may be appropriate.

A sample form for summarizing CAM and Business Office discussions is contained in Section 6.11.

At the end of the CAM discussions, the IBR team should be able to determine if the assembled contractor

team can effectively manage at the control account level and ensure that all significant risks are identified.

3.5. IBR Exit Briefing and Follow-up

The culmination of the IBR is an Exit Briefing. If the incremental IBR process has been well-exercised,

all significant risks have been identified, action items prepared, and all critical action items completed.


3.5.1. Prerequisites for IBR Exit Briefing

The IBR incremental process places emphasis on completing action items by the two major review points

(Readiness Review and IBR Exit Briefing). Action items from quality assessments and integration traces

are expected to be completed by the Readiness Review Meeting (that initiates CAM discussions).

Similarly, action items from the CAM discussions are expected to be completed by the Exit Briefing. This

emphasis results in a minimum number of action items carried forward from the Exit Briefing.

3.5.2. IBR Exit Briefing Content

The IBR Event is a presentation led by the Government IBR Team that includes the following:

Top level review of the IBR results

Rating of risk areas

Review of open action items

Review of program-level risks

Review of the SRA for major milestones and program completion dates

Review of the IBR Event, Accomplishments, and Accomplishment Criteria, as depicted in the

IMP, as part of the Exit Briefing.

Section 6.13 contains a sample of an Exit Briefing.

3.5.3. PMB Approval – Criteria and Documentation

The IBR Program Event described in the IMP normally includes an Accomplishment Criterion for approval

of the PMB. This PMB approval is the Government acceptance that the PMB is well defined, achievable,

and the risks associated with its achievement are understood by both contractor and Government teams.

The definition of the PMB is addressed by the artifact quality reviews and the data integration traces.

During Phase I of the IBR, the trace from requirements into detailed work packages that define the effort

necessary to satisfy all requirements is verified. In addition, achievability of the PMB is examined by

teams focusing on technical, schedule, cost, and resource aspects of the plan during this phase. The

understanding of risks is possible through artifact reviews, integration traces, and CAM discussions.

The PMB is the schedule for expenditure of resources at the control account level that reflects the scope

of the contract. The PMB is defined by several documents:

The IMS reflects the time sequencing of the contract scope

The Control Account Plan reflects the time phased budget by control account

The SOW reflects the scope of work to be performed

The requirements documents (e.g. SRD, Technical Requirements Document (TRD), Spec) reflect

the performance required of the product based on the scope of work

The IMP reflects the events, accomplishments, and criteria for the scope of work

Work Authorizations reflect the assignment of work to CAMs

Therefore, if there are discrepancies in the above documentation, the PMB may not be correctly defined.

If there are discrepancies in the definition between the documents, the flow from requirements to detailed

plans may not be correct. Phase I of the IBR process is focused on PMB definition, its completeness,

accuracy, and proper integration across the family of documents.

The decision for PMB approval will be made prior to the IBR Exit Briefing and the following questions

should be considered in determining if the PMB is adequately defined:

Is the entire scope of work included in the PMB?

Is the work sequenced and time-phased?

Are requirements well defined and either cross-referenced or flowed down to the control account

level?

Is there joint understanding between the contractor and Government teams on the definition of the

PMB?
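
As a rough illustration of the first and third questions, the flow-down of requirements to control accounts can be checked mechanically once the trace is recorded as data. The sketch below is a minimal example under stated assumptions: the identifiers (SRD-001, CA-1.1), the function names, and the dictionary layout are hypothetical and are not part of the Air Force process or of any particular tool.

```python
def untraced_requirements(requirement_ids, trace):
    """Return requirements that no control account claims to cover.

    `trace` maps a control account ID to the set of requirement IDs it
    covers (a hypothetical layout chosen for this sketch).
    """
    covered = set()
    for reqs in trace.values():
        covered |= set(reqs)
    return sorted(set(requirement_ids) - covered)


def unknown_references(requirement_ids, trace):
    """Return, per control account, cited requirement IDs that do not exist."""
    known = set(requirement_ids)
    result = {}
    for account, reqs in trace.items():
        extra = sorted(set(reqs) - known)
        if extra:
            result[account] = extra
    return result


# Hypothetical sample data, for illustration only.
requirements = ["SRD-001", "SRD-002", "SRD-003"]
trace = {
    "CA-1.1": {"SRD-001"},
    "CA-1.2": {"SRD-002", "SRD-009"},
}

gaps = untraced_requirements(requirements, trace)
strays = unknown_references(requirements, trace)
print("Requirements with no control account:", gaps)
print("Control accounts citing unknown requirements:", strays)
```

A non-empty result from either function would be a candidate action item for the Phase I integration traces. It does not replace the joint review of whether the requirements themselves are well defined.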

The PMB should be reasonably achievable. Some deviation from the baseline is common in almost all

programs. However, when programs deviate significantly from the baseline, the benefits of Earned Value

Management diminish as the number and magnitude of variances grow. Eventually, a time and labor

intensive reprogramming may be required. For these reasons, the PMB should be reasonable and

achievable.

The following questions should be considered in determining if the PMB is achievable:

Are activities sequenced as the work should be performed?

Are activities sequenced in the most effective and efficient manner?

Are adequate resources planned for the work scope?

Is the schedule margin consistent with the projected completion dates based on the SRA?

Is there adequate cost margin (Management Reserve) for the program risks identified to date?

Is the cost margin consistent with the schedule margin?

Is there cost margin beyond the risks identified to date?

Have rolling wave planning approaches limited detailed visibility of critical program events?

Are there peaks and valleys in staffing profiles that may be difficult to achieve?

Are the management processes in place adequate to identify risks / opportunities in a timely

manner?

Is the PMB consistent with prior contractor performance history?

Do both contractor and Government teams have the same understanding of program risks?

Based upon PMB definition and achievability, the PM makes the final decision to approve the PMB.

Approval of the PMB is not the same as IBR completion. The closure of the IBR is discussed in the

following section.

When the PMB is approved, normally the PM completes a Memo for the Record (MFR) to document that

the PMB has been approved. This additional documentation is appropriate since the PMB is manifest in a

wide variety of artifacts, some of which are not deliverables subject to approval. Section 6.14 contains a sample MFR.

The MFR may also address the closure of the IBR if the timing of the PMB approval and the IBR closure

are identical.

3.5.4. IBR Closure – Criteria and Documentation

The IBR is considered closed when all the accomplishment criteria for the IBR Program Event are met as

defined in the IMP. Normally the last item to be completed is the closing of any critical action items.

Critical action items are steps that must be completed to satisfactorily define the PMB, adjust the PMB to

make it achievable, or further define any potential risk events that are not yet included in the Risk

Register. Action items of less severity than critical may be categorized as “major” or “minor.”

At the conclusion of the IBR Exit Briefing, the action items have been reviewed and a schedule for the

completion of all open actions has been agreed to by the Government and contractor IBR teams.

Depending upon agreement between the DCMA Contract Management Office (CMO) and the

Government PMO, the local CMO may be responsible for monitoring the subsequent closeout of the

remaining actions (those not deemed critical action items). A monthly report updating the actions is sent

to the Government PMO. Upon completion of the actions, the local CMO documents the closeout in the

form of a report to the Government PMO. Alternatively, the Government PMO may monitor the closeout

of the remaining action items. In either case, the DCMA and Government PMO should maintain close

liaison until all IBR action items are complete.
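
The closure rule described in this section can be sketched as a small check over an action item list. This is an illustrative sketch only: the class and function names, the item identifiers, and the single `other_criteria_met` flag (standing in for the remaining IMP accomplishment criteria) are assumptions made for the example, not an Air Force or DCMA tool.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    CRITICAL = "critical"
    MAJOR = "major"
    MINOR = "minor"


@dataclass
class ActionItem:
    ident: str          # hypothetical identifier such as "AI-01"
    severity: Severity
    closed: bool = False


def open_critical_items(items):
    """Critical action items that are still open."""
    return [i for i in items if i.severity is Severity.CRITICAL and not i.closed]


def ibr_can_close(items, other_criteria_met):
    """True when the other criteria are met and no critical item is open."""
    return other_criteria_met and not open_critical_items(items)


def items_to_monitor(items):
    """Open major/minor items carried forward for CMO or PMO monitoring."""
    return [i for i in items if not i.closed and i.severity is not Severity.CRITICAL]


# Hypothetical sample data, for illustration only.
items = [
    ActionItem("AI-01", Severity.CRITICAL, closed=True),
    ActionItem("AI-02", Severity.MAJOR),
    ActionItem("AI-03", Severity.MINOR, closed=True),
    ActionItem("AI-04", Severity.CRITICAL),
]

before = ibr_can_close(items, other_criteria_met=True)
items[3].closed = True  # the last critical item is completed
after = ibr_can_close(items, other_criteria_met=True)
monitored = [i.ident for i in items_to_monitor(items)]
print(before, after, monitored)
```

Keeping the critical items separate from the carried-forward items mirrors the text: the former gate IBR closure, while the latter are tracked to completion after the Exit Briefing.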

4. Detailed / Specific IBR Procedures

This section contains specific IBR procedures for topics that cut across the chronological description of

activities contained in Section 3.

4.1. Organizing the Air Force IBR Team

This incremental IBR process places different requirements on personnel than a compressed single IBR

event. It is advisable to have a core Air Force IBR Team that can devote a portion of their time to the IBR

from contract award through IBR closure. As the IBR progresses, other personnel can be included for

document quality evaluations, integration traces, and CAM discussions. The core team should minimally

include the IBR Integrator and representatives from Engineering, Finance, and Program Management

organizations. Consider calling in advisors or subject matter experts (SMEs) in areas such as contracts,

logistics, and risk to augment the team where needed. To maintain continuity, core team members should

ideally not rotate out during the IBR period. It may be worthwhile for the contractor to organize a

corresponding IBR core team.

4.2. Scoping the Performance Measurement Baseline

Scoping the PMB, that is, defining which portions of the contract will be applicable to the IBR, is

essential. PMB scoping needs to be done before artifact quality checks and artifact integration

evaluations begin. The IBR team needs to have a sound understanding of the scope of the PMB as well

as the timing for the development of the PMB at the IBR evaluation level.

During the RFP preparation phase of the acquisition, the Government PMO will have a reasonable idea

of how the program contract will be structured. This will certainly be clear at the prime contractor level.

The CLIN structure will define the portions of the contract that are applicable to Earned Value

Management. This will become the primary selection method for scoping the PMB. CLINs that are cost

plus or fixed price incentive types typically become part of the PMB.

When Earned Value Management is flowed down to the subcontractors, the basic scope of the PMB is

unchanged but the timing of its definition may be extended. Consider the example below:

[Figure: Sample PMB Scope]

Prime CLINs: $10M FFP; $80M FPIF; $20M T&M

Subcontracts: Sub A $10M FFP; Sub B $15M FPIF; Sub C $20M FPIF; Sub D $10M T&M

Timeline markers: Prime CA, Sub A CA, Sub B CA, Sub C CA, Sub D CA (months shown: January, February, March, April, May)

In the example above, the prime contractor has three CLINs. Only the FPIF CLIN is applicable to EVM

and subsequently to the IBR. Besides the prime contractor’s direct efforts, there are four

subcontractors. Those subcontractors that are under the FPIF CLIN and meet the EVM thresholds will

require an IBR. Subcontractor C would be expected to have an IBR, as would the prime contractor. The

prime contractor’s scope would be the entire $80M of effort in the FPIF CLIN (even though some subcontracted

efforts are FFP). Subcontractor C would have an IBR covering its $20M FPIF effort.
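
The scoping logic in this example can be expressed as a short sketch. Several things here are assumptions made for illustration: the $20M threshold value (the text refers to "EVM thresholds" without stating a figure), the set of contract types treated as EVM-applicable (cost plus and fixed price incentive, following the CLIN discussion above), the placement of all four subcontracts under the FPIF CLIN, and every class and function name.

```python
from dataclasses import dataclass

# Assumption: contract types treated as EVM-applicable in this sketch
# (cost plus and fixed price incentive types).
EVM_CONTRACT_TYPES = {"CPFF", "CPIF", "CPAF", "FPIF"}

# Assumption: illustrative EVM threshold in $M; not stated in the text.
EVM_THRESHOLD_M = 20.0


@dataclass(frozen=True)
class Effort:
    name: str
    value_m: float       # value in millions of dollars
    contract_type: str   # e.g. "FFP", "FPIF", "T&M"


def is_evm_applicable(effort):
    """True when the contract type is one that typically joins the PMB."""
    return effort.contract_type in EVM_CONTRACT_TYPES


def requires_ibr(effort, threshold_m=EVM_THRESHOLD_M):
    """True when the effort is EVM-applicable and meets the assumed threshold."""
    return is_evm_applicable(effort) and effort.value_m >= threshold_m


prime_clins = [
    Effort("Prime FFP CLIN", 10, "FFP"),
    Effort("Prime FPIF CLIN", 80, "FPIF"),
    Effort("Prime T&M CLIN", 20, "T&M"),
]
# Assumption: all four subcontracts fall under the prime FPIF CLIN.
subcontracts = [
    Effort("Sub A", 10, "FFP"),
    Effort("Sub B", 15, "FPIF"),
    Effort("Sub C", 20, "FPIF"),
    Effort("Sub D", 10, "T&M"),
]

pmb_clins = [c.name for c in prime_clins if is_evm_applicable(c)]
prime_ibr_scope_m = sum(c.value_m for c in prime_clins if is_evm_applicable(c))
subs_needing_ibr = [s.name for s in subcontracts if requires_ibr(s)]
print(pmb_clins, prime_ibr_scope_m, subs_needing_ibr)
```

Under these assumptions the sketch matches the narrative: the prime IBR covers the full $80M FPIF CLIN, and only Subcontractor C has its own IBR. Changing the threshold or the list of contract types changes the outcome, so both should be taken from the actual contract requirements.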

4.3. Training Throughout the IBR Process

IBRs are infrequently performed activities. As a result, training is required for personnel participating in

an IBR. Not all participants are available throughout the IBR period so some training sessions may have

to be offered multiple times. Three distinct training sessions are appropriate. The first session orients

Government and contractor personnel to the IBR process and provides an EVM refresher. The second

session informs attendees about the specifics of Phase I and prepares the joint IBR team for those efforts.

This is normally a joint training session. The final session prepares the team for Business Office and

CAM discussions. The attendance necessary for this session includes Government and contractor

personnel participating as part of the IBR team in the discussion sessions.

An outline of recommended subject matter for each session is shown below. Note that some material is

repeated, as personnel may be joining the IBR team as the process unfolds.

IBR Orientation and EVM Refresher Training

    o EVM Basics Refresher
        - EVM Background
        - WBS
        - Scheduling
        - Budgeting
        - EV Methods
        - EV Measurements
        - EV Reporting
    o IBR Requirements / Timeline
    o Overview of IBR Process
    o IBR Roles / Responsibilities
    o IBR Schedule / Next Steps

IBR Phase I Training

    o IBR Timeline
    o Overview of IBR Process
    o IBR Roles / Responsibilities
    o Phase I Activities
        - Artifact Quality Evaluations
        - Integration Traces
    o Phase I Recording / Reporting / Scoring
    o Action Item Identification and Management
    o Readiness Review Preparation

IBR Phase II Training

    o IBR Timeline
    o Overview of IBR Process
    o IBR Roles / Responsibilities
    o Phase II Activities
        - Business Office Discussions
        - CAM Discussions
    o Preparations
    o Rules for Conduct
    o Phase II Recording / Reporting / Scoring
    o Action Item Identification and Management
    o IBR Exit Briefing Preparation

4.4. Artifact Adjustment During the IBR Process