CRL Mapping the Newspaper Industry 1 | Page

PRESERVING NEWS IN THE DIGITAL ENVIRONMENT: MAPPING THE NEWSPAPER INDUSTRY IN TRANSITION

A Report from the Center for Research Libraries

April 27, 2011


This report is an initial attempt to map the “lifecycle” for news content and information published in

newspapers and online; and to clarify the relationship between the news content produced for those

two major distribution channels.

The report includes two sections:

1. Background and methodology

2. An overview of news workflow and systems, organized around the three major stages in the

lifecycle of news information

CRL will continue to refine and update the maps and lifecycle information contained herein, adding

detail, particularly on technical processes that, because of the proprietary nature of much of the

technology, we were not able to obtain within the timeframe of the study.

Contributors to this report were:

Jessica Alverson

Kalev Leetaru

Victoria McCargar

Kayla Ondracek

James Simon

Bernard Reilly

Illustrations were produced by Eileen Wagner, Eileen Wagner Design, Chicago IL.


TABLE OF CONTENTS

Section 1 Background and Methodology

Section 2 Overview of News Workflow and Systems

A. Sourcing News Content

A.1.1 Submission and Ingest of Content

A.1.2 Photographs and Other Non-text Media

A.2 Normalization and Editorial Markup of Text and Images

B. Editing and Producing the News

B.1 Production Systems

B.1.2 “Pagination” or Newspaper Design and Layout

B.3 Archiving and Digital Asset Management

B.3.1 The Major Digital Asset Systems

B.3.2 Digital Asset Management Processes

C. Distributing News Content

C.1 Print Distribution

C.2 Distribution through Electronic Facsimile (E-facsimile)

C.3 Distribution through Text Aggregators

C.4 Distribution via the Web

C.4.1 General Differences between Print and Online

C.4.2 Variable Lifespan of Web Content

C.4.3 Sourcing Web Content

C.4.4 Web Production System and Workflows

C.4.5 Secondary Web Distribution Networks

Appendix A Chicago Tribune Content Velocity Analysis, by Kalev Leetaru


SECTION 1: BACKGROUND AND METHODOLOGY

The decline of the newspaper industry, combined with the ascent of digital media for news reporting

and distribution, means that merely preserving newspapers and traditional broadcast media will no

longer ensure future access to a comprehensive journalistic record. News production is no longer the

periodic, linear process with a single, fixed output that it was in the era of the printed newspaper. It is

now a continuous loop of news gathering, processing, versioning, output, response, and update.

Therefore devising effective strategies for preserving news in the electronic environment requires an

understanding of the “lifecycle” of news content.

Fundamental changes in this lifecycle are now occurring as major newspapers re-orient their operations

and distribution channels from paper and printing to digital environments and platforms. How the news

that appears in newspapers and on the Web is sourced and reported, how it is edited and processed,

and how and in what forms it is distributed must all be taken into account when framing library action to

prevent loss of this important class of historical evidence.

The Library of Congress has a vested interest in addressing this challenge. LC has been a key link in the

newspaper supply chain for the major US academic and independent research libraries. This role is tied

to LC’s copyright registration, acquisition and microfilming operations, and to its overseas operations.

Today, understanding what processes and formats play a role in typical digital production workflows

might provide the basis for a new approach to preserving electronic news at a national level.

The present report grew out of a workshop convened by the Library of Congress National Digital

Information Infrastructure and Preservation Program (NDIIPP) in September 2009 to explore possible

strategies for collecting and preserving digital news on a national basis. For purposes of discussion at

that forum LC defined digital news to include, at minimum, “digital newspaper Web sites, television and

radio broadcasts distributed via the Internet, blogs, pod casts, digital photographs, and videos that

document current events and cultural trends.”

Prompted by those discussions CRL proposed to examine, analyze, and document the flow of news

information, content, and data for four major newspapers from production and sourcing, through

editing and processing, to distribution to end users. Four newspapers provided a test bed for the

project: The Arizona Republic, Seattle Post-Intelligencer (since 2008, seattlepi.com), Wisconsin State

Journal, and The Chicago Tribune. The test bed newspapers were chosen to provide a variety of types of

newspapers that represent a broad segment of the U.S. newspaper industry and its publishers. The

report also draws from CRL analysis of three other news organizations: The New York Times, Investor’s

Business Daily, and the Associated Press.


Our study addressed the following points of interest:

1. The nature of the electronic facsimile: LC was particularly interested in determining how one

particular news format, the “electronic facsimile” of the printed newspaper, might be exploited

for copyright registration and archiving purposes.

2. The relationship between Web and print news “coverage”: LC wanted to understand how

content posted to each newspaper’s Web site compares with the contents of the paper edition.

3. Technical formatting and delivery of electronic news output: To what extent are there extant or

emerging industry technical standards for formatting, managing and/or disseminating news

content? And what is the range of current practices for formatting and encoding news for

distribution in print and on the Web?

Section 2 of this report provides an overview of news sourcing, production and distribution based on the

practices of the test bed newspapers. Note is made where an individual newspaper deviates

significantly from this generalized narrative.

The authors hope that this report can help pinpoint the potential “high-impact point of entry” in this

workflow where libraries and other memory organizations can capture critical news content and

metadata and ensure the long-term survival and accessibility of the American journalistic record.


SECTION 2: AN OVERVIEW OF NEWS WORKFLOW AND SYSTEMS

The production processes and workflows set up to produce newspapers in print are still very much at

the center of the operations of news organizations like the Arizona Republic and Chicago Tribune. These

activities have always been built around daily and weekly news delivery cycles. Broadcast, and more

recently the Web, however, have made possible a continual, accelerated flow of information and news,

which is now approaching real time reporting. As a result the lifecycle of news is changing profoundly.

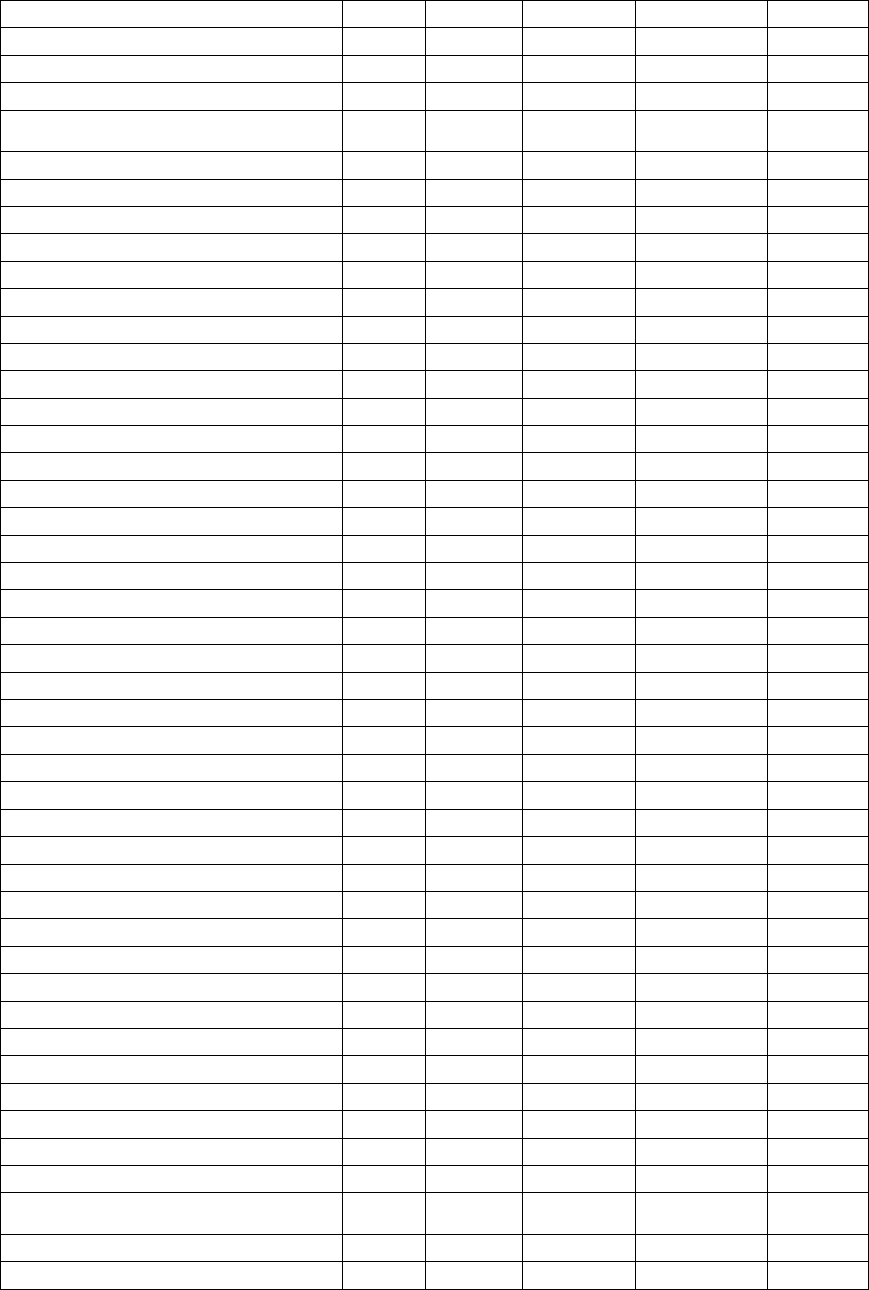

For purposes of the report we characterize the stages of this lifecycle as:

A. Sourcing: the gathering of news information and content by the news organization from

those who create, report, and/or own that information and content;

B. Editing and production: the editing, processing, and enhancement of news content and

information, and preparation of outputs for distribution through various media.

C. Distribution: dissemination and exposure of news content, and products and derivatives

thereof, through print and online media.

Essential to each stage of this lifecycle are automated systems deployed to produce, modify, and

annotate content and prepare the products of the newsgathering process. The major systems involved

are editorial, digital asset management or archives, pagination, and Web production systems. In

addition third-party providers, working in tandem with news publishers, employ their own systems to

produce and deliver news content through the publisher’s Web and print channels.


A. SOURCING NEWS CONTENT

The contents of a given newspaper issue or news Web site are produced by a host of individuals and

organizations. Some content is produced by reporters, photographers, columnists, illustrators, and

others under the auspices of the newspaper publisher (local content). Other content is supplied by wire

services, photo agencies, data services, syndicates, affiliated newspapers, independent or freelance

producers, and by parent organizations of the newspaper publisher (syndicated content). The main

difference between sourcing for the print newspaper and for the Web news site is that for the latter

considerably more content, and more processing of that content, is supplied by third party commercial

service providers, such as advertising agencies or “ad servers,” and Google Earth (third-party content).

Content/data:

• News wire reports

• Article, feature texts

• Graphics

• Photographs

• Audio recordings

• Video recordings

• Tables

• Databases

• News object metadata

• User data

• Algorithms and programming code

Actors:

• Standards organizations (such as IPTC, NAA)

• Newspaper publishers (Arizona Republic, Seattle Post-Intelligencer, the Tribune Company, Wisconsin State

Journal)

• Parent organizations (Gannett, Hearst Newspapers, Tribune Media)

• Affiliated newspapers and news organizations (print, broadcast)

• Editors

• Staff reporters, photographers, columnists, illustrators, cartoonists

• Independent, freelance reporters, photographers, columnists, cartoonists/artists

• Wire services (AP, Agence France-Presse)

• Syndicates and other content providers (King Features, Washington Post Writers Group, TV Guide)

• Photo agencies (Corbis, Agence France-Presse)

• Data and polling services (Dow Jones, National Weather Service, Nielsen Company, Major League Baseball)

• Advertising agencies

• Ad and data servers


In addition news websites, more than newspapers, make use of information and other content

provided by readers (user-created content) either directly through web platforms like blogs, polls, and

other forums controlled by the news organization, or indirectly through third-party social media sites

like YouTube, Twitter, and Facebook. This proliferation and diversification of sources and ingest

channels presents complicated workflow and rights management challenges to news organizations, and

to those who seek to preserve news.


A.1 SUBMISSION AND INGEST OF CONTENT

Content is submitted to newspaper publishers by a multitude of individuals and organizations, under

varying sorts of arrangements with the publisher. These include reporters and photographers, wire

services, advertisers, and syndicates. Content is submitted by these parties directly to the newspaper

publisher in a number of ways:

• Mail, courier and in-person delivery of hard copy and digital copy on portable media from

reporters, photographers, columnists, artists, readers, and other contributors

• Email text and attachments

• Input of locally produced content to publisher’s production system directly by reporters,

photographers, columnists, artists, and other authorized contributors

• FTP from syndicates and other proprietary suppliers

• Web download from syndicates and other proprietary suppliers

• Web upload through blogs and comment postings on the publisher’s web site.


Some publishers permit citizen journalists and readers to submit news stories and photographs by email

and direct Web upload. The direct upload systems normally have a submission form and an SQL back-

end for data storage. User-supplied content is often mediated by social media developers like Pluck,

which screens and filters reader comments submitted to The Arizona Republic and other Gannett

newspaper Web sites. (Pluck checks for abusive comments made in discussion forums, story chats and

blogs on a 24/7 basis.)

Commercial advertising services and direct mail houses deliver page image files either to the publisher’s

production system or directly to the publisher’s printer. Some third-party data and content providers,

such as advertising, weather, and financial reporting services, simply deliver URLs, JavaScript, and

metadata to the newspaper publisher’s production system that then “point to” images, videos, and

other multimedia content residing on the provider’s own specialized media servers.


A.1.1 SUBMISSION OF TEXT CONTENT

News text submissions to newspapers come in two general forms:

1) News and syndicated text feeds – Brief text reports of breaking news produced by syndicates

and wire services like the Associated Press and Reuters; and discrete items like articles, columns,

editorials and other writings produced by syndicates, other news organizations, and providers.

2) Locally produced text – Discrete articles, columns and other written texts, in a form intended for

publication as stories, columns, letters, blog posts, or other elements of the newspaper or

website.

Most editorial systems accept these texts in a variety of proprietary and open file formats, including

plain text (.txt), Unicode UTF-8 (.utf8), rich text format (.rtf), Word (.doc and .docx), ASCII (.ascii), and

email (Outlook .msg is the most common). Older news production systems like NewsDesk accept texts

only in rich text (.rtf) or plain text (.txt) formats.

A.1.1.1 NEWS AND SYNDICATED TEXT FEEDS

Wire services, syndicates, and other providers of texts to newspaper publishers transmit bundles or packages of texts (feeds) that can consist of a single brief news report or a structured story or column. These texts are normally rigidly structured and transmitted to publishers in one of a number of standard markup formats designed specifically for news interchange. These markup formats include older standards like IPTC 7901 [1] and ANPA 1312 [2] and newer XML-based formats, including:

• News Industry Text Format (NITF), for text news items and articles [3]

• NewsML, for text, photo and multimedia news content, i.e., packages of text with accompanying photographs, databases, video, audio and other media [4]

• SportsML, for transmitting sports scores and data, and

• EventsML, a standard for event-related news information.

IPTC 7901, ANPA and NITF are exchange formats originally developed for structuring newspaper text content for transmission, and are used in marking up simple news item and article texts only. They are descended from the codes developed by the Associated Press for delivery of text via dedicated newswire networks. The Associated Press coding is shown in the annotated text illustrated below.

[1] IPTC 7901 was one of the first news text exchange formats, introduced by the International Press Telecommunications Council (IPTC) in the early 1980s, and is still in use by some wire services and news organizations. It was intended to facilitate international exchange of news reports, and takes into account technical and linguistic differences between countries and accommodates numerous alphabets. The format structures text transmissions into four sections: pre-header information, a message header, message text, and post-text information. These format elements are separated by control characters: SOH (start of header), STX (start of text), and ETX (end of text), and the transmission is terminated by EOT (end of transmission). More on IPTC 7901 at: http://www.iptc.org/site/News_Exchange_Formats/IPTC_7901/

[2] ANPA 1312 is a 7-bit news agency text markup protocol designed to standardize the content and structure of text news articles. It was created in the late 1970s by the American Newspaper Publishers Association (now the Newspaper Association of America). Last modified in 1989, it is still a common method of transmitting news to newspapers, web sites and broadcasters from news agencies in North and South America. Using fixed metadata fields and a series of control and other special characters, ANPA 1312 was originally designed to feed text stories to both teleprinters and computer-based news editing systems.

[3] News Industry Text Format (NITF), developed by the International Press Telecommunications Council (IPTC) in the 1990s, uses XML to define the content and structure of news items and articles. Because NITF metadata is applied throughout the news content, NITF documents are far more searchable and useful than HTML pages. NITF enables publisher systems to automate formatting of their documents to suit the bandwidth, devices, and personalized needs of subscribers. NITF-encoded documents can be translated into HTML, WML (for wireless devices), RTF (for printing), and other common formats used by news publishers. For further details on NITF see: http://www.iptc.org/site/News_Exchange_Formats/NITF/Introduction/

[4] NewsML was first developed in 1999 and updated in 2008 to “represent and manage news throughout its lifecycle.” It was designed to provide a media-type-independent, structural framework for multi-media news. Beyond exchanging single items NewsML can also convey packages of multiple items in a structured file. It supports the representation of electronic news entities such as news-items, parts of news-items, collections of news-items, relationships between news-items and metadata associated with news items. For further details on the NewsML schema see: http://www.iptc.org/std/NewsML/1.2/specification/schema-doc/NewsML_1.2.html#Link06456060
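As a rough illustration of how control characters can frame a transmission in the older exchange formats, the Python sketch below splits a message into four sections using the standard ASCII control characters SOH, STX, ETX and EOT. The section order, the function name split_7901_message, and the sample message are assumptions made for illustration only; they are not taken from the IPTC 7901 specification.

```python
# Standard ASCII control characters.
SOH = "\x01"  # start of header
STX = "\x02"  # start of text
ETX = "\x03"  # end of text
EOT = "\x04"  # end of transmission


def split_7901_message(raw: str) -> dict:
    """Split one transmission into four sections.

    Assumed layout (a simplification, not the specification):
    pre-header SOH header STX text ETX post-text EOT
    """
    pre_header, found, rest = raw.partition(SOH)
    if not found:
        raise ValueError("no SOH (start of header) found")
    header, found, rest = rest.partition(STX)
    if not found:
        raise ValueError("no STX (start of text) found")
    text, found, rest = rest.partition(ETX)
    if not found:
        raise ValueError("no ETX (end of text) found")
    # Anything after EOT is ignored.
    post_text, _, _ = rest.partition(EOT)
    return {
        "pre_header": pre_header.strip(),
        "header": header.strip(),
        "text": text.strip(),
        "post_text": post_text.strip(),
    }


# Hypothetical sample; every field value here is invented.
sample = (
    "ZCZC" + SOH + "abc001 4 pol 120 Example-Slug"
    + STX + "CHICAGO - Body of the report."
    + ETX + "271430 APR 11" + EOT
)
sections = split_7901_message(sample)
print(sections["header"])
print(sections["text"])
```

A production system would go on to parse the header section into its fixed fields (priority, category, word count and so on), which this sketch leaves as a single string.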


NewsML™ and APPL (Associated Press Publishing Language) are newer, media-type agnostic news

exchange standards that enable news organizations to provide a wealth of descriptive, structural and

administrative information on transmitted news objects (e.g., reports, articles, photographs) that can be

read by automated production systems at recipient newspapers. They enable production systems, for

example, to manage articles, photographs and other content over time by providing information on

rights status (publishable, embargoed, etc.) and information on authorship and copyrights (source,

credit line, terms of use, etc.). They also enable news agencies and publishers to generate the same text

in multiple languages; video clips in different formats; and the same photograph in different resolutions.

(AP delivers news items in ANPA, NITF and APPL formats.) [5]

[5] The IPTC web site contains a wealth of information and vocabularies on the various text markup formats, at http://www.iptc.org/cms/site/index.html?channel=CH0112


The XML-structured data file for an NITF or NewsML news item includes tags that identify the various

components of an item text, such as headline, byline, dateline, lead, body text, column headings, and so

forth. The file can also incorporate additional tagged administrative information, such as level of

urgency, embargo period, release date, version date, usage rights, and references to accompanying

images. For example, a version of an article originally reported on November 25, 2008 but updated in

2010 would have the following NITF date tags:

<date.issue norm="20081125T143000-0500" />

<date.release norm="20100226T093000-0500" />

And the following NewsML date tags:

<contentCreated>2008-11-25</contentCreated>

<versionCreated>2010-10-19T16:25:32-05:00</versionCreated>

Normally the XML tagging will also include general news categories (“business,” “weather,” “sports,”

etc.), relevant geographical location(s), and subject keywords.
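The date tags above can be read programmatically. The following Python sketch, which uses only the standard library, parses the NITF and NewsML values shown in the example and checks that the release or version date falls after the original date. The wrapping <sample> element and the helper name parse_nitf_norm are assumptions added so that the fragments can be parsed on their own; a real feed would carry these tags inside a complete NITF or NewsML document.

```python
import xml.etree.ElementTree as ET
from datetime import datetime

# The fragments are wrapped in a made-up <sample> root so they parse as XML.
nitf = ET.fromstring(
    "<sample>"
    '<date.issue norm="20081125T143000-0500" />'
    '<date.release norm="20100226T093000-0500" />'
    "</sample>"
)
newsml = ET.fromstring(
    "<sample>"
    "<contentCreated>2008-11-25</contentCreated>"
    "<versionCreated>2010-10-19T16:25:32-05:00</versionCreated>"
    "</sample>"
)


def parse_nitf_norm(value: str) -> datetime:
    """Parse the compact date-time form used in the example's norm attribute."""
    return datetime.strptime(value, "%Y%m%dT%H%M%S%z")


# Index the child elements by tag name.
nitf_dates = {el.tag: parse_nitf_norm(el.get("norm")) for el in nitf}
newsml_dates = {el.tag: datetime.fromisoformat(el.text) for el in newsml}

issued = nitf_dates["date.issue"]
released = nitf_dates["date.release"]
created = newsml_dates["contentCreated"]
version = newsml_dates["versionCreated"]

print(issued.isoformat())
print(released > issued)
# contentCreated carries no time zone, so compare calendar dates only.
print(version.date() > created.date())
```

Comparing the two dates in this way is one simple means by which an archive could tell an updated version of an item from its original release.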


XML-structured content enables the automation of some processes within a newspaper’s production

system. Most production systems are designed to read the XML tags and to translate the structured

data into functionality on various platforms. For example, event-related data structured in EventsML

interacts with style sheets and templates in Web-oriented editorial systems like NewsGate and web

production systems like Nstein’s WCM to generate -- and automatically update -- event listings on the

news Web site, and even to “purge” those listings when the embedded “end” or expiration dates arrive.
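The automatic “purge” behavior described above can be pictured with a short Python sketch. It is not EventsML and does not reflect how NewsGate or Nstein’s WCM are implemented; the EventListing class, its fields, and the sample events are hypothetical stand-ins for a listing that carries an embedded end date.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class EventListing:
    title: str
    start: date
    end: date  # stands in for the embedded "end" or expiration date


def purge_expired(listings, today):
    """Keep only listings whose end date has not yet passed."""
    return [item for item in listings if item.end >= today]


# Invented sample data.
listings = [
    EventListing("County fair", date(2011, 4, 20), date(2011, 4, 24)),
    EventListing("Jazz festival", date(2011, 4, 29), date(2011, 5, 1)),
]
current = purge_expired(listings, today=date(2011, 4, 27))
print([item.title for item in current])
```

For preservation purposes the point of the sketch is that an expired listing simply disappears from the published site unless it is captured before its end date.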

Although the major news content sources employ their own proprietary variations on the standard IPTC tags and codes (Reuters, for instance, has its own “flavor” of NewsML), the major editorial systems in use by the newspapers are able to translate most of these variations to their local codes automatically, through the use of profiles developed for each major news source. Other newspapers use third party data formatting services like Fingerpost to convert wire service article texts to their own preferred format before ingest. [6] (The Chicago Tribune uses Fingerpost to convert wire service feeds to ANPA format.)

Therefore, at minimum, texts transmitted by news agencies and wire services using IPTC/ANPA codes include information regarding the transmission standard used, date and time of transmission, author (if a feature or article), source of transmission, urgency of information, word count, and general news category. Texts marked up in newer, XML formats are more richly tagged and often include document type, rights and release-related information, version information, subject key words, date and place of origin, background on the story or image, and references to accompanying materials.

[6] Fingerpost, a UK-based software producer, produces Fingerpost Information Processor (FIP), a service for http://www.fingerpost.co.uk/

A.1.1.2 LOCALLY PRODUCED TEXT

Newspaper production systems accommodate unstructured texts as well. Locally produced text, i.e., text produced by staff or assignment writers, reporters, columnists, editors, and others, is often created in common word processing applications and then uploaded by the authors or editorial staff directly to the newspaper’s production system. In some cases article and feature texts are authored within the production system environment itself, using Microsoft Word or comparable, system-proprietary word processing software.


For these texts the production system generates a certain minimal amount of metadata on ingest, such as assignment ID, author name, usage rights, date and time, general news category, and urgency, as well as subject information and references to accompanying materials such as photographs and charts.

Therefore, at minimum, locally-produced texts submitted to, or authored within, a newspaper’s editorial system will include information regarding the date and time of ingest, assignment or article ID, author, urgency of information, word count, and general news category. Some texts are more richly tagged, with rights and release-related information, version information, subject key words, date and place of origin, and references to accompanying materials.

A.1.2 PHOTOGRAPHS AND OTHER NON-TEXT MEDIA

Individual producers and contributors of image and multimedia content to newspapers employ a variety of digital capture and recording devices. These include:

o Digital still photo cameras

o Video cameras

o Digital audio recorders

o Hybrid still/video cameras

o Cameras built into cell phones and PDAs

These devices employ a variety of proprietary and open formats and applications. Still photo and graphic image file formats accepted by the major news production systems include: JPEG, JPEG2000, TIFF, RAW, EPS, PNG, GIF, and PDF as well as numerous proprietary formats. [7] Newer newspaper content management systems, designed for Web production, also accommodate multimedia file formats such as WAV, MP3, Flash, MPEG3, and others. In many instances (the Chicago Tribune, for example) content in video, audio and other multimedia formats bypasses the newspaper’s editorial system and is ingested directly into the Web production system.

[7] According to Jon Swerens, The News Sentinel’s CCI Cheat Sheets, the NewsDesk editorial system accepts images in the following formats: Adobe Photoshop, Apple PICT, AWD Fax Format, BMP, Canon Raw Format (CRW), Casio Digital Camera, Commodore-Amiga IFF, Computer-aided Acquisition and Logistics Support (CALS), DCX, FAX (Brooktrout, LaserData and WinFax), FlashPix, Foveon X3F, FujiFilm FinePix S2 Pro RAF, Graphics Interchange Format (GIF), JPEG, JPEG 2000, Kodak Photo-CD, Minolta MRW, Kodak Professional Digital Camera, Leica Digilux 2 RAW, Nikon NEF, Olympus ORF, Paint Shop Pro Image, Panasonic DMC-LC1 RAW, Pentax *ist D PEF, Portable Bitmap, Graymap, and Pixelmap (PBM, PGM, PPM), PCX, Portable Network Graphics (PNG), Psion MultiBitMap, Raw Format, Seattle Film Works, SGI Image File, Sony DSC-F828 SRF, Sony Digital Camera, Sun Raster, TARGA, TIFF, WAP WBMP, Windows Icon, Windows Metafile, Windows XP Thumbs.db, X Bitmap, X PixMap, X Windows Dump.

A.1.2.1 PHOTO METADATA AND MARKUP

Today most photos are submitted to newspapers electronically, or are scanned and ingested

electronically from hard copy. Staff and freelance photographers, wire services, and photo agencies

include IPTC-fielded metadata with their electronic submissions. Some of this information is generated

automatically by the capture device (cell phone, camera). More is added during the “post-camera”

processes, such as editing in Photoshop, and still more in the “picture desk” and digital asset

management systems used by the syndicates and photo agencies.

There are several generations of standards for representing data about photographs. The principal

standard for data about photographs, graphics and other images, in wide use in the news industry since

the 1990s, is the Information Interchange Model (IIM). IIM is still widely used for photographs today.


IIM was created by the IPTC in 1991 as a set of metadata for digital text, photos, graphics, audio, and

even digital video streams. IIM enables the transfer of these data objects between computer systems,

providing an envelope around the object containing information on the type of content or document,

file format, transmission date and time, etc. IIM metadata elements are known as "IPTC fields" in the

"IPTC header" of digital image files. Some IIM metadata is automatically applied to photographs by

digital cameras.

Exif is a multimedia metadata format standard adopted by most manufacturers of digital cameras to

embed JPEG, TIFF and RAW format images with technical information. Exif metadata can include such

information about the image as the make and model of the camera used, settings, timestamp, GPS

location, photographer ID, and even face recognition details, as well as “color space” information such

as RGB or Adobe RGB, plus orientation (“landscape” or “portrait”) and maximum available size of the

image in pixels. Additional IIM metadata can be applied by photographers to their images “post-camera,”

in Photoshop, FotoStation, Extensis Portfolio, and other widely used image management

software.


The various IPTC image metadata standards specify the types of information that can be included in

specific fields in the IIM envelope for a digital object and identify the numerical and controlled

vocabulary tags for those fields. IIM numerical tags identify such categories of information as the file

format of the photograph (1:20), priority (2:10), key words (2:25), datelines (2:55), and author (2:80);

and IIM symbols for general types of content, such as arts, culture and entertainment (ACE), disasters

(DIS), health (HEA), etc., aid in directing the image to the appropriate newspaper “desk.” Within these

broad categories number codes designate more specific subjects, such as fashion (01007000), earthquake

(03002000), labor disputes (09004000), etc. [8]

Within the IIM fields there is considerable variation in the vocabularies used. The IPTC gives general

directions that allow providers a certain amount of latitude, such as “Not repeatable, Maximum 32 octets,

consisting of graphic characters plus spaces” for the geographic source of a transmitted photograph or

audio-recording. So codes representing the source of the transmission could be either “PAR-5” or “PAR-

12-11-01,” the latter incorporating the November 12, 2001 date of a photograph transmitted from the

AP’s Paris bureau.
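The numbered fields and the field constraint quoted above lend themselves to a small lookup-and-validate sketch in Python. The tables below contain only the tag numbers and subject codes mentioned in this report, not the full IIM vocabulary. The function names are hypothetical, and reading “graphic characters plus spaces” as printable ASCII is an assumption rather than the IPTC’s own definition.

```python
# Record:dataset numbers and subject codes quoted in this report only.
IIM_DATASETS = {
    "1:20": "file format",
    "2:10": "priority",
    "2:25": "key words",
    "2:55": "dateline",
    "2:80": "author",
}
SUBJECT_CODES = {
    "01007000": "fashion",
    "03002000": "earthquake",
    "09004000": "labor disputes",
}


def describe_dataset(tag: str) -> str:
    """Return the field name for a record:dataset tag, if it is listed."""
    return IIM_DATASETS.get(tag, "unknown")


def is_valid_source_field(value: str) -> bool:
    """Check the quoted rule: at most 32 octets of graphic characters plus spaces.

    Assumption: "graphic characters plus spaces" means ASCII 0x20 to 0x7E.
    """
    try:
        encoded = value.encode("ascii")
    except UnicodeEncodeError:
        return False
    return 0 < len(encoded) <= 32 and all(0x20 <= b <= 0x7E for b in encoded)


print(describe_dataset("2:25"))
print(SUBJECT_CODES["03002000"])
print(is_valid_source_field("PAR-5"), is_valid_source_field("PAR-12-11-01"))
```

Both “PAR-5” and “PAR-12-11-01” pass the check, which illustrates the latitude the text describes: the rule constrains length and character set but says nothing about how providers structure the value.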

IIM metadata is most often stored in the image file itself. To the metadata generated automatically by

digital cameras, photographers and editors can add more metadata in the “post-camera” stage, i.e., in

the course of image processing with software such as Adobe’s Photoshop. In 1995 Adobe adopted IIM as

a format for metadata in its Photoshop product. Hence many of the IIM fields map directly to metadata

fields in Adobe, Apple and other image management software. [9]

[8] The 2007 IPTC white paper provides a great deal of information on the IIM metadata schema and its use with press and agency photographs: International Press Telecommunications Council, Photo Metadata White Paper 2007, at: http://www.iptc.org/std/photometadata/0.0/documentation/IPTC-PhotoMetadataWhitePaper2007_11.pdf

[9] IIM tags include descriptive metadata (e.g., genre, headline, and subject information); administrative information (unique file ID number, title, date and place created, job ID, instructions like embargo, caption author); rights (photographer and job title, credit line, copyright line, rights contact, releases, usage rights, provider); and information on metadata schemas (such as IIM or the updated IPTC Core schema for XMP; PLUS, regarding picture licensing rights; and the more robust DIM2). For further detail on IIM, see the IPTC Web site at http://iptc.cms.apa.at/site/News_Exchange_Formats/IIM/


Today many syndicates and photo agencies provide more richly tagged photographs in an XML wrapper,

usually using NewsML. NewsML provides much richer encoding possibilities for digital photographs as

well as news texts and multimedia. NewsML formatted information for a photo transmitted by an

agency could include metadata marked up as follows:

<usageTerms>NO ARCHIVAL OR WIRELESS USE</usageTerms>

<versionCreated>2010-10-19T02:20:00Z</versionCreated>

<pubStatus qcode="stat:usable" />

<urgency>4</urgency> [“1” indicates the most urgent]

<fileName>USA-CRASH-MILITARY_001_HI.jpg</fileName>

<headline xml:lang="en-US">A firefighter walks past the remains of a military jet that

crashed into homes in the University City neighborhood of San Diego</headline>

<description xml:lang="en-US" role="drol:caption">A firefighter in a flameproof suit

walks past the remains of a military jet that crashed into homes in the University

City neighborhood of San Diego, California December 8, 2008. The military F-18

jet crashed on Monday into the California neighborhood near San Diego after the

pilot ejected, igniting at least one home, officials said.</description>

<creditline>Acme/John Smith</creditline>

Therefore, a photograph transmitted from a photo agency or a photographer to a given

newspaper will, at minimum, normally include information about its authorship, source, technical

characteristics, subject matter, urgency, and general terms of use. It might also include, however,

an abstract or descriptive information, version history, and related media objects.
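As a rough illustration of how a receiving system might read such a record, the following Python sketch parses a shortened copy of the fragment shown above. It is a minimal sketch under stated assumptions: the <photoMeta> wrapper element and the function name are hypothetical, added only so that the fragment parses as a single document, and a real NewsML feed would carry namespaces and considerably more structure.

```python
import xml.etree.ElementTree as ET

# Shortened copy of the fragment above. The <photoMeta> root element is a
# hypothetical wrapper, not part of NewsML; it only makes the fragment parseable.
FRAGMENT = """<photoMeta>
<usageTerms>NO ARCHIVAL OR WIRELESS USE</usageTerms>
<versionCreated>2010-10-19T02:20:00Z</versionCreated>
<pubStatus qcode="stat:usable" />
<urgency>4</urgency>
<fileName>USA-CRASH-MILITARY_001_HI.jpg</fileName>
<creditline>Acme/John Smith</creditline>
</photoMeta>"""


def read_photo_metadata(xml_text):
    """Return a flat dict mapping each element name to its value."""
    root = ET.fromstring(xml_text)
    record = {}
    for child in root:
        # Elements such as <pubStatus> carry their value in a qcode attribute;
        # the others carry it as element text.
        record[child.tag] = child.get("qcode") or (child.text or "").strip()
    return record


record = read_photo_metadata(FRAGMENT)
print(record["fileName"], record["urgency"], record["pubStatus"])
```

A flat dictionary is used here only for readability; it discards attributes such as xml:lang and role that a production system would need to retain.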


A.2 NORMALIZATION AND EDITORIAL MARKUP OF TEXT AND IMAGES

Editorial systems and digital asset management systems normally convert photographs on ingest to

JPEGs. JPEG is the most widely used format for newspaper photos and all major editorial, digital asset

management, pagination, and Web production systems accommodate it. Graphic images are

normalized to EPS (Encapsulated PostScript) format, which is also a standard format used in print

production.

Once within the editorial system, texts and images are normally assigned additional metadata, using

proprietary file formats and tags that generally correspond to IPTC subject codes and terms, augmenting

the information originally attached to the object by wire services, reporters and other providers. At this

point in their lifecycle news texts and photographs are highly structured in ANPA or XML and are heavily

annotated with fielded information. For example, text received by The Chicago Tribune from the

Associated Press may arrive with broad, standard IPTC subject category designations in the metadata

(“news,” “sports,” “business,” etc.) and general keywords like “Illinois,” “politics,” and “governor.” Tribune

editors then enrich this metadata by adding more specific, locally relevant keywords, like “Cook

County” and “corruption,” and system-generated elements such as article ID, assignment number,

references to related news objects, etc., and even descriptive abstracts.
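The enrichment step described above can be sketched in Python as a merge of provider metadata with locally added keywords and system-generated fields. This is an illustration only: the field names, the identifier values, and the enrich function are hypothetical and do not reflect the internal format of any editorial system named in this report.

```python
def enrich(wire_item, local_keywords, system_fields):
    """Return a copy of the wire item with local keywords and system fields added."""
    enriched = dict(wire_item)
    # Preserve the provider's keywords, then append local ones without duplicates.
    merged = list(wire_item.get("keywords", []))
    for keyword in local_keywords:
        if keyword not in merged:
            merged.append(keyword)
    enriched["keywords"] = merged
    # System-generated elements (hypothetical names and values).
    enriched.update(system_fields)
    return enriched


# Metadata as it might arrive from the wire service (values taken from the text).
wire_item = {"category": "news", "keywords": ["Illinois", "politics", "governor"]}

story = enrich(
    wire_item,
    local_keywords=["Cook County", "corruption"],
    system_fields={"article_id": "A-0001", "assignment_number": "ASSIGN-42"},
)
print(story["keywords"])
```

The original wire record is left unmodified, so the provider's metadata remains distinguishable from what the newsroom added.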

However, as the story or photograph moves toward formatting for print, web and other platforms and

operating systems and integration with other elements of the issue or Web feed, it is gradually stripped

of much of that information.


B. EDITING AND PRODUCING THE NEWS

Editorial or production activities, as we discuss them here, are the processes and tasks that take place

under the auspices of a newspaper publisher or its parent organization, to produce a printed edition, a

Web site, or other electronic derivatives of same, and to manage the content needed to support those

activities. These activities involve the review, revision, editing, formatting, markup, and tagging of the

sourced content and the assembly of a newspaper’s print edition from that content, formatting of

content feeds to text and electronic facsimile aggregators, and the development and formatting of

content for the newspaper’s Web site. These activities are augmented by services provided by other

organizations, including printers, advertisers, and data providers that also produce content and data for

a publisher’s print and Web output.

Content/data:

• Article, feature texts

• News wire reports

• Graphics

• Photographs

• Audio recordings

• Video recordings

• XML text and metadata

• Page image files

• Databases

Actors:

• Newspaper publishers (e.g., Arizona Republic, Seattle Post-Intelligencer, Chicago Tribune)

• Parent organizations (e.g., Gannett, Hearst Corporation, Tribune Media)

• Affiliated newspapers and news organizations

• Editors and newsroom managers

• Photo editors

• Media organizations, syndicates (e.g., News Corporation, Gannett, Hearst, Tribune)

• Associations and standards bodies (e.g., IPTC, NAA, ISO)

• Designers, layout artists

• News archivists

• Programmers and system designers

• Software producers (Adobe, Microsoft)

• System vendors (CCI, DTI, Nstein)

• System managers and hosts


In the newspaper era every newsroom functioned in essentially the same way. More than two centuries

of practice in the US and the industry’s dependence upon a small number of major syndicates (AP, UPI,

the New York Times, Magnum, Dow Jones) for news gathering created a certain uniformity of practice.

This uniformity applied to the news cycle (daily, weekly), writing style and formats (inverted pyramid,

editorials, and advertisements), and conventions in placement of masthead, headlines, bylines, article

text, and other page elements (multiple columns, sections, above and below the fold). Many of these

processes went the way of the afternoon newspaper as the Web emerged as a primary platform for

news access. But the effect of the print-era conventions is still apparent in the organization of the

electronic newsroom. As Victoria McCargar writes:

Newsroom technology . . . uses metadata schemes that reflect these structures, and something

approaching a standard is central to virtually all news production. In part, this reflects the

dominance of wire-service protocols that date back decades and have evolved along with

technology but still maintain these basic structures.

10

In the digital environment current practice for formatting and encoding news for print and Web

distribution is shaped by a handful of industry standards and by a legacy of proprietary local systems and

processes. Media convergence and consolidation in the news industry, however, are creating even more

uniformity of practice today. As local newspapers are acquired by larger media groups, many

production practices are being dictated by the home office. And back-office operations are being

combined through implementation of enterprise-wide systems that provide common platforms for

managing text, still image, video, audio, and database content.

This drive to uniformity is enabled by the networked nature of digital technology, which is changing the

balance between internal and outsourced processes, and local and centralized control of those

processes.

At the same time developments in media technology are giving rise to a wider variety of derivative news

products, which in turn makes the production process newly complex. In fact, not all content that ends

up in the newspaper or on the newspaper’s Web site even travels through the publisher’s editorial

system. Certain types of content supplied by third parties, such as print advertising inserts and

supplements, are delivered directly to the printer by direct mail and advertising services providers. And

certain multimedia content and data feeds for Web display reside only on the services or data provider’s

servers.

10

Victoria McCargar, Associated Press Repository Profile, Center for Research Libraries, revised January 2011.

http://www.crl.edu/sites/default/files/attachments/pages/AP%20Profile%20final%20doc_3_2011.pdf Accessed

11/12/10.

Most editorial systems are built around the traditional concept of a news article or story. Following

longstanding newsroom practice most text is maintained in the editorial system as part of a standard

unit of content: it is a news item (for wire service reports) or a story, article, or feature. Photographs

are normally managed either as individual items (from wire services and syndicates) destined to be

linked with a related story or feature, or are bundled as an assignment, for later winnowing by editors.

The richest and most extensive tagging is applied to content during the editorial process. Story text,

photographs and other content are tagged with administrative information on authorship, date, status

and ownership of rights, version, language, level of urgency, place of origin, and so forth. Specialized

and highly localized subject tagging is applied in the editorial system, and abstracts are sometimes

generated as well. Much of this information is later stripped out or lost, as the final published version of

the news product takes shape.

B.1 PRODUCTION SYSTEMS

Most components of the print, Web and electronic facsimile editions of newspapers are produced using

one or more of three integrated systems: an editorial system, an archive or digital asset management

system, and a pagination system.

Today, as news organizations increasingly regard the Web as their primary distribution channel, the

number of systems that can accommodate the complex content management needs of multi-platform

news production is becoming smaller. The industry leaders in producing these systems are CCI Europe,

Nstein, SCC, and DTI. The Tribune Company has implemented CCI’s NewsGate as its editorial system.

The Seattle Post-Intelligencer, along with the other Hearst newspapers, is in the process of

implementing Nstein’s DAM, TCE and Web Content Management systems. These systems integrate the

functions of the legacy print production systems with the capability to format and deliver content to the

Web and a range of electronic devices, such as mobile phones, PDAs, and e-readers.

A notable common feature of the newer systems is that they are often deployed and maintained

centrally by a newspaper’s parent organization, rather than in the local newsroom itself. The ability of such

systems to be accessed by reporters, photographers and other content producers via the Web and to


ingest content from multiple remote locations means that they need not be instantiated at the local

level. They can also be scaled to handle editorial and production work for several different newsrooms

at once. As some of the systems incorporate administrative functions such as assignments, circulation

management, and billing, they offer advantages to parent organizations that endeavor to increase their

control over local newspaper operations and budgets.

These editorial, archives, and pagination systems tend to use proprietary software, create their own

versions of standard content formats, and employ their own variants of industry standards for content

markup and coding. IPTC codes are used alongside locally developed codes that identify placement in

particular newspaper-specific sections, for instance, or indicate special rights arrangements regarding

news content. Therefore, markup and formatting tend to be uniform only among publishers using the

same production systems.

The individual editorial systems often consist of discrete modules which can be adopted individually and

operated in tandem with existing legacy systems or with systems produced by other system vendors.

The Arizona Republic, for example, employs the NewsDesk editorial system and Layout Champ

pagination system, both produced by CCI Europe, alongside the DC5 archive or content management

system, which was developed by Gannett Technologies. (NewsDesk is one of the older editorial systems

produced by CCI Europe and is still in use at many newspapers.) The Chicago Tribune uses CCI’s next

generation NewsGate integrated editorial and pagination system in tandem with Assembler, its own

Web authoring system. The Wisconsin State Journal uses Advanced Publishing Technology's Falcon

Editorial 4.1.9 combined with Adobe's InDesign (design and pagination) system.

B.1.1 THE EDITORIAL SYSTEM

Systems: CCI NewsDesk, CCI NewsGate, Unisys Hermes, Nstein WCM, APT Falcon Editorial

The editorial system provides writing and editing functions, storage of text news objects prior to

publication, encoding tools, and export of content to pagination and digital asset management systems,

archives and other channels. Content enters the editorial system with what minimal metadata is

supplied by the producer or provider, using standardized International Press Telecommunications

Council (IPTC) codes. (See Section A.1, Sourcing, above.)


The text editing component of most editorial systems converts imported text (e.g. structured text from

wire feeds, or word-processed documents submitted by reporters) to Microsoft Word or their own

proprietary version of same. Stories are then composed and edited directly within the editorial system

environment. News texts, which at this stage can be either draft articles or fast-breaking news items or

updates from the wire services, are then copy-edited, marked up and tagged in proprietary SGML/XML

formats, and stored. The tagging codes conform at least loosely to the IPTC standards, in order to

optimize earlier tagging by the wire services and capture or authoring devices.

Once material is ingested, converted, and assigned IPTC metadata, it is sent to appropriate "pools"

within the system. Wire service stories are automatically parsed according to attached metadata, and

filtered into several categories in a "wire basket." Text is also tagged with additional subject keywords

and names, and sent into a "text pool," which is filterable and searchable. The story can grow and

evolve through several iterations within the system. Layout begins here, as the

edited text is assigned a preliminary headline, lead, and an article ID, and linked with photographs,

graphs, or other graphic material maintained separately in the digital asset management system.
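The routing of wire stories into baskets and the filtering of the text pool can be pictured with a short Python sketch. The record layout, category names, and function names below are hypothetical; the editorial systems discussed here are proprietary and we did not examine their actual data structures.

```python
from collections import defaultdict


def sort_into_baskets(items):
    """Group wire items by the category carried in their attached metadata."""
    baskets = defaultdict(list)
    for item in items:
        baskets[item.get("category", "uncategorized")].append(item)
    return dict(baskets)


def search_pool(pool, keyword):
    """Return the items in the pool that are tagged with the given keyword."""
    return [item for item in pool if keyword in item.get("keywords", [])]


# Hypothetical wire items with minimal provider metadata.
wire_items = [
    {"id": 1, "category": "sports", "keywords": ["baseball"]},
    {"id": 2, "category": "news", "keywords": ["Illinois", "governor"]},
    {"id": 3, "category": "news", "keywords": ["Cook County"]},
]

baskets = sort_into_baskets(wire_items)
matches = search_pool(wire_items, "governor")
print(sorted(baskets), [item["id"] for item in matches])
```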

As in the traditional newsroom, the "story" is a basic unit of content and organizing principle for

editorial activities. From the CCI NewsGate product brochure:

The Story Folder -- the "homepage" of a story in NewsGate -- is the central collaboration area for

everybody in the newsroom contributing to the creation and development of the story. In the

Story Folder editorial teams are formed; assignments are distributed; background information is

gathered; relevant contact information is stored; and content is collected, created, edited and

published. . . . The Story Folder constitutes the central hub for research and story development.

After copy-editing, the texts are tagged with publication-related indicators for layout elements, such as

publication date, section, byline, headline, etc., as well as administrative information such as

assignment, rights status, level of urgency, etc.

Ads for the print edition are either provided by advertisers in PNG or JPEG format, or are produced in-

house from camera-ready material provided by the advertiser. (Ads for the Web edition follow an

entirely different production path.) Multimedia formats often do not enter the editorial system, but

rather bypass the system entirely and are ingested into the Web production system.


Once the copy editors complete their work, the XML article package for the print edition is released to

the pagination system for final layout. The package is also forwarded to the Web production system.

B.1.2 “PAGINATION” OR NEWSPAPER DESIGN AND LAYOUT

Systems: Unisys Hermes, CCI Layout Champ, DTI NewsSpeed

After the articles and other components of a newspaper print edition are assembled and tagged in the

editorial system they are usually exported to a pagination system where the page layouts for the print

edition and e-facsimile edition are created. It is in the pagination system that most of the content for

these editions of a newspaper is brought together for the first time.

At this stage the separate article text elements (headline, dateline, byline, lead, body) and related

images, photographs, and charts are all separate files in the native formats of the generating editorial

system or digital asset management (DAM) system. For text this is usually either a proprietary version

of Word or an XML-formatted text document marked with minimal tags for paragraph and character

idiosyncrasies like italics, diacritics, etc.

The accompanying photographs are low-resolution JPEG files, to be used for positioning only. The

article components are linked through unique article or “news item” ID numbers generated by the

editorial system, which enable them to be assembled by the layout artist. In the pagination system the

article texts and images are then joined “physically” to the other elements and contents of the issue,

such as charts, illustrations, masthead, editorials, columns, advertisements, etc.

Advertisements to appear in the body of print newspapers are often produced in a separate CCI module

called AdDesk, and uploaded to the pagination system. (Printed advertising inserts are produced by

third-party direct mail houses and enter the printing workflow separately. Banner and video

advertisements are also produced separately and served directly to the Web by third party providers like

AdTech, Google Ads, Pulse 360, and Brightcove.)

Therefore, at this stage in the production process the content elements (photographs, article

texts, etc.) will have minimal metadata, most of which is information relating to layout of the

print edition. Such metadata will normally include issue date, section, page number, and

references to related content, as well as text structure indicators such as headline, byline, and

dateline. Photographs and illustrations will also include cutline or caption text.
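The loss of editorial metadata at the pagination stage can be made concrete with a small Python sketch. It assumes a hypothetical record layout; the set of retained fields simply mirrors the layout-related metadata listed in this section, and the field names are illustrative rather than those of any actual pagination system.

```python
# Hypothetical field names for the layout metadata said to survive at this stage.
LAYOUT_FIELDS = {
    "issue_date", "section", "page_number", "related_content",
    "headline", "byline", "dateline", "caption",
}


def strip_for_pagination(record):
    """Split a record into the fields kept for layout and the names of those dropped."""
    kept = {key: value for key, value in record.items() if key in LAYOUT_FIELDS}
    dropped = sorted(key for key in record if key not in LAYOUT_FIELDS)
    return kept, dropped


# A richly tagged editorial record (all values are made up for illustration).
editorial_record = {
    "headline": "Sample headline",
    "byline": "Staff reporter",
    "dateline": "CHICAGO",
    "issue_date": "2010-06-20",
    "section": "A",
    "page_number": 1,
    "keywords": ["Cook County", "corruption"],
    "rights_status": "staff",
    "urgency": 4,
    "abstract": "Short descriptive abstract.",
}

kept, dropped = strip_for_pagination(editorial_record)
print(dropped)
```

Listing the dropped field names alongside the retained record makes visible exactly what a preservation effort would need to capture upstream, before the content leaves the editorial system.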

Pagination system software enables designers to lay out individual articles in the form in which they are

to appear on the printed page, determining the article size, shape, type fonts, spacing, arrangement,

and other design elements. These elements display visually within the pagination system environment.

Programmed into the pagination system software are the particular rules and templates that give a

particular newspaper its distinctive look, such as the type fonts and sizes used, ink color palette, number

of columns, etc.

The design tools and templates often segment article or news item components, for example causing

the headline, lead and beginning of an article text to appear on page one and the text to continue

unbroken on an interior page. As one designer explains the process, “You design the article shape then

fill them in with the headline, lead, byline and other article elements.”

The most widely used pagination system is LayoutChamp, which is produced by CCI Europe. (Other

widely used pagination systems are Unisys’ Hermes and DTI’s NewsSpeed.) LayoutChamp is a

proprietary software suite that employs its own native formats. These systems are often used in

conjunction with “typesetting engines” like H&J, TeX and column justification algorithms, and often

integrate with image editing software like Photoshop and Illustrator, allowing editors and layout staff to

make edits and alterations within the pagination environment. Other page design tools, including

QuarkXPress and Adobe InDesign, are often incorporated with, or used in lieu of, pagination systems.

Once composed, the page files are tagged with codes in the pagination process that indicate the

particular section and page number, with additional information on "zones." This section and edition

coding is not uniform from one paper to the next because many of the section and edition names differ.

(For example, the Tribune produces different sections for different regions of distribution, such as North

Shore, South Side, national, etc., whereas the Arizona Republic’s sections are Scottsdale, Tempe, North

Valley, etc.). The section codes are important to the plate-setting and print production process.

Meanwhile the photographs and graphics selected for use in the edition are processed separately,

normally using Photoshop or proprietary software of the Digital Asset Management system. Here

cropping and color adjustment are done, and the brief cut line is expanded to a full caption. In some

instances the photographs are further enhanced for uniformity. (Gannett operates regional “toning

centers” for this purpose.)

The set of composed page images for each edition of the print issue (e.g., “early,” “final,” metropolitan

and regional) are then saved using Adobe Acrobat, in PDF or PostScript® format. The Tribune and

Arizona Republic prefer PostScript for printing. (PostScript is a page description language that preceded

PDF, developed to drive high-quality image and text printing.)

Once assembled, the page image files are then often run through a correction and optimization

processor, such as OneVision, which uses one of a number of proprietary software types to enhance the

color balance and consistency and overall image clarity. Image and graphics content, because they

come from a number of different sources, may vary in quality, resolution, and color balance.

There is little uniformity in the metadata embedded in the output page image files from one publisher

to the next and even among multiple titles produced by the same publisher. While Adobe imaging

software does accommodate storage of a considerable amount of metadata, the page image files we

examined had very little information. At best, the metadata actually embedded in these files included:

• File name (e.g., “ARIZONA REPUBLIC front page”)

• File title (“06-20-10A_01-Z0.pdf” for the June 20, 2010 Arizona Republic front page)

• Type of software or service used to produce the PDF (“OneVision PDFengine”,“Acrobat Distiller

Services”)

• Date and time of creation (“6/20/10 3:01:41 AM”,“5/28/2010 4:20:03 PM”)

• Date and time of revision

• Color correction and optimization application used (“Asura version 8.2”)

• File size

• Page size (in inches)

This information roughly maps to the IIM fields developed by IPTC, but the field content is normally not

consistent in structure or in controlled vocabulary.
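Because so little is embedded in the files themselves, a recipient often has to recover issue information from strings such as the file title above. The Python sketch below parses the one example given, “06-20-10A_01-Z0.pdf”. Only the date and the fact that this is a front page come from our sources; reading “A” as the section, “01” as the page number, and “Z0” as a zone code is an assumption, as are the pattern and function name, and other publishers' titles will not fit this pattern.

```python
import re
from datetime import date

# Assumed layout: MM-DD-YY, section letter, underscore, page number, hyphen, zone code.
TITLE_PATTERN = re.compile(r"^(\d{2})-(\d{2})-(\d{2})([A-Z])_(\d+)-(\w+)\.pdf$")


def parse_page_file_title(title):
    """Return issue details parsed from a page file title, or None if it does not fit."""
    match = TITLE_PATTERN.match(title)
    if match is None:
        return None
    month, day, year, section, page, zone = match.groups()
    return {
        # Two-digit years are assumed to fall in the 2000s.
        "issue_date": date(2000 + int(year), int(month), int(day)),
        "section": section,
        "page": int(page),
        "zone": zone,
    }


info = parse_page_file_title("06-20-10A_01-Z0.pdf")
print(info)
```

Returning None for a non-matching title, rather than guessing, reflects the lack of uniformity noted above: each publisher's scheme would need its own pattern.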

Often, minimal information is also embedded in the titles of the individual page image files. But this too

is not uniform. One source told us, “When we receive PDFs from publishers they come with file names

set up by the publisher. We get all kinds of different naming conventions for publishers and there could

be multiple naming conventions within a chain of papers which suggests they do not have any standard


process.” An illustration of sample file names from different sources, reflecting slightly different naming

schemes, appears below.

In some cases there is also an index file that goes along with the set of page images, to guide the plate-

setters and aggregators in properly sequencing the pages and sections of an issue. We were not able to

determine what these index files look like. But inclusion of these files is not standard practice, and most

sequencing is guided by the “profile” for the particular publication programmed into the plate-setter

system.

The set of page image files for a daily newspaper edition are transmitted nightly to the designated

printing plants. (This transmission normally occurs between midnight and 4:00 AM.) Some publishers

send these files by an FTP feed. Others place them in a “drop folder” in a Web-accessible database,

from which they are then retrieved by the printer’s plate-setter system.


B.1.2.1 THE PLATE-SETTING PROCESS

Systems: Agfa’s Advantage, Krause’s LS Performance, ECRM’s Mako and Newsmatic

“Plate-setter” or “imagesetter” systems are used to generate sets of CMYK (cyan, magenta, yellow and

key) printing films or polyester plates for each newspaper page.

11

To administer the printing jobs

printers use “TIFF-catcher” software, such as CtServer, normally mounted on a PC workstation, which

maintains a list of jobs submitted to the printer’s RIP system

12

in “drop” folders. Each job is associated

in the drop folder with a property set, which is a set of output characteristics, such as margins, cut size,

and output media type and width, associated with the particular job or newspaper. Placing a TIFF file in

the drop folder submits that TIFF for tagging, scheduling and printing. The tags now include

Photometric Interpretation information, such as positive or negative image, margin (white/black),

resolution, image size, and colors (e.g., CMYK). The CtServer operator then generates separate TIFF files

for each color (CMYK plus) for each image. (In many cases the ECRM RIP system has already generated

separations.) These computer-to-printer processes are increasingly automated.

Additional, updated page-image files are often transmitted to the printers before and even during the

press run. These files incorporate corrections, updates, or even entire new elements, resulting in

variations in content within the same day’s edition. After printing, pages are combined with printed

advertising, comics or magazine supplements which are produced from a separate workflow managed

by specialty publishers and direct mail houses.

The same set of edition files used for printing is also transmitted to aggregators that publish the

facsimile editions, such as NewspaperDirect (PressDisplay), NewsStand (ProQuest), Active Paper Daily

(Olive), and Image E-editions (NewsBank). Many newspapers then archive copies of the PDF files for

each section front (e.g., pages A1, B1, C1) in their digital asset management systems. Since these PDF

files are simple image files, however, they lack much of the rich administrative, structural and

descriptive metadata associated with the various component parts of the newspaper issue applied by

providers and in the editorial process.

11

CtServer software is used to send jobs to the plate-setter. It is also used to define the plate and pin bar

requirements for jobs and communicate that information to the plate-setter, which displays the information on the

control panel LCD. The use and operation of CtServer is documented in the CtServer User’s Guide. CtServer

“provides for setting up, monitoring and outputting jobs to ECRM imaging devices.” Users’ Guide is at

http://www.ecrm.com/uploads/support_documents/ag4508914.pdf

12

http://www.ecrm.com/newspaper/subcategory/RIP%20Upgrades/24/

B.1.2.2 FORMATTING FOR TABLETS AND E-READERS

Many publishers also make versions of their news products available on e-readers and tablet computers

like Amazon’s Kindle and Apple’s iPad. Although the e-reader formats have an even greater degree of

functionality than the conventional electronic facsimiles, they normally begin with versions of the page

images much like those generated for print and e-facsimile publication. Editorial and pagination

systems, like NewsGate and NewsDesk, can create content for these special applications. Pagination

systems such as Adobe InDesign and CCI LayoutChamp use special templates for the particular device

formats to generate PDF page images and accompanying metadata. These page-image files with XML

metadata are then processed by various vendors for distribution on various e-reader and tablet

platforms.

The files are processed through special applications like Skiff and WoodWing in order to function on the

proprietary e-reader platforms used by Amazon’s Kindle, Barnes & Noble’s Nook, and Apple’s iPad, some

of which involve touch-screen functionality. While there is considerable variation in the respective

outputs of these platforms, the article files are richly embedded with codes and annotations that enable

a high level of functionality when viewed on the target devices.

Therefore, at this stage in the production process the content elements (PDF page images) will

have minimal metadata, most of which is information relating to the particular issue, section and

page of the print edition and the processing of the PDF file.


B.3 ARCHIVING AND DIGITAL ASSET MANAGEMENT

Some content acquired or generated by local news organizations has value for potential reuse. In

particular, still images and video produced by staff photographers and by freelancers on assignment,

articles and columns written by local reporters and editors, and illustrations by staff artists constitute

“assets” or intellectual property considered by the news organization to be worth preserving over time.

These assets include both published and unpublished materials.

Originally such materials were kept in newspaper “morgues” or clippings files. Some newspapers even

maintained extensive back files of their published editions. Those paper files often contained materials

to which a newspaper owned publication rights as well as materials obtained for one-time or limited use

from reporters, photographers, syndicates, and other providers. Much of this infrastructure

disappeared as the newspaper industry downsized during the 1980s and 1990s, and as the locus of

many parts of the news operations shifted to the parent company.

In the 1970s and 80s, as newspapers introduced digital production methods, the amount of content a

newspaper accumulated became formidable, and the proliferation of potentially usable content in

multiple versions and multiple electronic formats began to present an enormous control challenge.

Digital asset management or “archives” systems were then developed for large-scale commercial use, to

provide the robust file and rights management capabilities necessary in the technology-driven media

environment. Initially these systems were created primarily to manage photographs. Today, with the

variety of media generated and managed by news organizations, and the “long tail” of value for digital

content, the management and repurposing of all types of digital assets for the multiple delivery

platforms (newspaper, magazine, radio, television, Web) under the control of the parent companies has

again become a central activity of news organizations.


B.3.1 THE MAJOR DIGITAL ASSET MANAGEMENT SYSTEMS

Systems: Hermes Doc Center, Gannett Media Technologies’ DC5 Digital Collections, SCC Media Server,

SCC’s Merlyn, NewsBank’s SAVE, Nstein’s DAM.

The Arizona Republic uses DC5 Digital Collections, an archives system developed by Gannett Media

Technologies International, an affiliate of the Republic’s parent company. Typical of the enterprise-level

digital asset management systems, DC5 is a powerful system with the capability to store and index

multimedia objects and file types. This system manages the following Arizona Republic content:

• Published photographs with cut line

• PDF images of published front pages

• Associated Press wire photographs for which the Arizona Republic has publication rights

• XML marked up texts of published articles

• Raw texts of articles not yet marked up

Photos of all formats are converted to a baseline JPEG format on import to DC5. Arizona Republic staff

photographers normally make initial selections of photos from their assignments and upload them

directly to the system, where editors perform further selection, cropping and captioning. The Republic’s

NewsDesk editorial system integrates with DC5 to append publication metadata to the photo’s record,

which then is stored for the longer term in DC5.

SAVE is a widely used archiving and digital asset management system operated and hosted by

NewsBank. The system was originally developed to accommodate the texts of articles published by

newspapers, to serve as a type of virtual morgue, but now accommodates page image files, texts,

photographs, and graphic content.

Seattlepi.com and its parent company Hearst Corporation use Nstein’s DAM system, a “media hub” for

storing, repurposing and syndication of its text and rich media digital assets. DAM is an XML content

repository that automatically interacts with Nstein's TME text-mining engine system to semantically tag

newspaper articles with subject categories, and to thus enhance discoverability of content across all

Hearst newspaper sites.


B.3.2 DIGITAL ASSET MANAGEMENT PROCESSES

Today many of these systems are used to store and manage all types of digital content, including stories

and other raw and published text, locally produced and wire photos, information graphics (ingested as

EPS files from vector software), third party-produced PDF documents, and PDF-format published

newspaper page images. Some of the formats accommodated by the major systems in use today

include:

• JPEG, TIFF, PSD, RAW and other photo formats

• EPS and native graphic formats like Illustrator™ or Freehand™

• PDF files with full-text indexing and searchable content

• Multimedia files such as QuickTime™, MP3, WAV, AVI

• Text in ASCII, XML, SGML, and HTML

• Native file types from Quark, Adobe, Microsoft, and Macromedia software.

Local tagging of news content in the digital asset management system is often enhanced through

semantic analysis, annotation and tagging by syndicates and other third parties. Wire services like

Reuters and Associated Press provide this kind of data tagging for client news organizations. Many AP

members submit their news text, audio and image content for tagging through AP’s Content Enrichment

Initiative. Reuters’ Open Calais service also provides rich subject and name analysis, tagging

and annotation of content files in all media.

Photographs chosen for publication are usually edited and formatted in the DAM system. The DC5

system, used by Gannett newspapers, generates multiple versions of the same image:

1. A low-resolution JPEG used only for page layout (known as “FPO” or “for position only”). This is

what a layout editor sees in the Layout Champ pagination program.

2. A high-resolution JPEG that is sent to a color-correction or “optimization” lab. This image is

converted from RGB to CMYK, settings are applied that ensure optimum print quality on the

local presses, and the result is later inserted in the page image files sent to the printer.

3. The original high-resolution RGB image (JPEG) that is the archival version.
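
The RGB-to-CMYK step in version 2 can be illustrated with a deliberately naive, profile-free formula. This is a sketch only: the report does not describe how the optimization lab performs the conversion, and production workflows typically rely on ICC color profiles tuned to a particular press. The function name `rgb_to_cmyk` is hypothetical.

```python
def rgb_to_cmyk(r, g, b):
    """Naive conversion of 8-bit RGB values to CMYK fractions (0.0 to 1.0).

    Illustrative assumption: no ICC profile or press-specific settings
    are applied, so this is not suitable for real print preparation.
    """
    if not all(0 <= v <= 255 for v in (r, g, b)):
        raise ValueError("RGB components must be in the range 0..255")

    # Scale each channel to the 0.0-1.0 range.
    r_, g_, b_ = r / 255, g / 255, b / 255

    # Black (K) is whatever the brightest channel leaves uncovered.
    k = 1 - max(r_, g_, b_)
    if k == 1:
        # Pure black: avoid dividing by zero below.
        return (0.0, 0.0, 0.0, 1.0)

    # Remaining ink is distributed across cyan, magenta and yellow.
    c = (1 - r_ - k) / (1 - k)
    m = (1 - g_ - k) / (1 - k)
    y = (1 - b_ - k) / (1 - k)
    return (c, m, y, k)


red = rgb_to_cmyk(255, 0, 0)
black = rgb_to_cmyk(0, 0, 0)
white = rgb_to_cmyk(255, 255, 255)
```

The separate black channel is the reason a print-ready version differs from the archival RGB original: the same picture is described with four inks instead of three light primaries.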

Some archive systems, such as SAVE and SCC MediaServer, also feature minimal text editing

capabilities, and can send output files (originally prepared in pagination systems) directly to

printers and to content vendors including Factiva, NewsBank, and LexisNexis.

In some instances two digital asset management systems are used in tandem. The Wisconsin State

Journal uses Merlin as its local archiving system for high-resolution published images after editing and

enhancing, and the SAVE system for text and as a generator of content for aggregators.

Unlike the morgues of the past, however, digital content management systems normally only hold

content for which a publisher either owns rights or has licensed rights for extended periods of time.

Blog posts and other user-generated content, for example, are not archived by the newspapers in the

digital asset management systems, because they are the intellectual property of the post writer.

Uploaded through a Web interface, blog posts are archived in the native blog platform, like WordPress,

but are rarely captured for long-term retention.
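
The retention rule described above can be sketched as a simple eligibility check. The `ContentItem` fields, the rights labels, and the one-year threshold standing in for "extended periods of time" are all assumptions made for illustration; the report does not specify how any particular system encodes rights.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class ContentItem:
    title: str
    rights: str  # hypothetical labels: "owned", "licensed", "third_party"
    license_expires: Optional[date] = None  # None: no expiry recorded


def eligible_for_archive(item: ContentItem, today: date,
                         min_license_days: int = 365) -> bool:
    """Return True if the publisher holds rights suited to long-term storage."""
    if item.rights == "owned":
        return True
    if item.rights == "licensed":
        if item.license_expires is None:
            # Assumption: an open-ended license counts as long-term.
            return True
        remaining = item.license_expires - today
        return remaining >= timedelta(days=min_license_days)
    # Content owned by someone else (e.g. a reader's blog post) is skipped.
    return False


today = date(2011, 4, 27)
staff_photo = ContentItem("Staff photo", "owned")
wire_photo = ContentItem("Wire photo", "licensed", date(2016, 1, 1))
short_license = ContentItem("One-time use graphic", "licensed", date(2011, 6, 1))
blog_post = ContentItem("Reader blog post", "third_party")
```

Under these assumptions the staff photo and the long-licensed wire photo would be archived, while the short-term license and the reader's blog post would not.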

Archive systems usually provide greater storage capacity and functionality for managing image and

multimedia files than editorial systems. This is the point in the news lifecycle where the most heavily

annotated and tightly controlled content files reside. Media files and article texts stored in these

systems are richly tagged with granular metadata relating to production date, authorship, provenance,

version, usage and resale rights, publication history, expiration dates, related news objects, and so forth.

The formats for such metadata generally follow the IPTC standards and vocabularies, or employ

system-specific or locally relevant tags mapped to those standards. Published stories and accompanying images, although

filed separately, can be linked to each other through this metadata and to corresponding page images.

And published images can be linked to outtakes from the same assignment.
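
The linking described above can be sketched with a minimal record structure. The field names below are hypothetical and do not reproduce the IPTC schema or any vendor's data model; they only illustrate how separately filed assets might reference one another through metadata.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class AssetRecord:
    asset_id: str
    kind: str  # e.g. "story", "photo", "outtake", "page_image"
    production_date: str
    author: str
    usage_rights: str
    related_ids: List[str] = field(default_factory=list)


def link(a: AssetRecord, b: AssetRecord) -> None:
    """Record a two-way relationship between two separately filed assets."""
    if b.asset_id not in a.related_ids:
        a.related_ids.append(b.asset_id)
    if a.asset_id not in b.related_ids:
        b.related_ids.append(a.asset_id)


def related(archive: Dict[str, AssetRecord], asset_id: str,
            kind: Optional[str] = None) -> List[AssetRecord]:
    """Return the assets linked to asset_id, optionally filtered by kind."""
    records = [archive[i] for i in archive[asset_id].related_ids if i in archive]
    return [r for r in records if kind is None or r.kind == kind]


# Illustrative records; all identifiers and values are invented.
story = AssetRecord("s1", "story", "2011-04-27", "Staff Writer", "owned")
photo = AssetRecord("p1", "photo", "2011-04-26", "Staff Photographer", "owned")
outtake = AssetRecord("p2", "outtake", "2011-04-26", "Staff Photographer", "owned")
page = AssetRecord("pg1", "page_image", "2011-04-27", "Production", "owned")
archive = {r.asset_id: r for r in (story, photo, outtake, page)}

link(story, photo)    # story and its accompanying image
link(story, page)     # story and the page it ran on
link(photo, page)     # image and the page it ran on
link(photo, outtake)  # published image and an outtake from the same assignment
```

A call such as `related(archive, "p1", kind="outtake")` would then retrieve the unpublished frames from the assignment that produced a published photograph.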

B.3.3 CENTRALIZATION AND OUTSOURCING OF ASSET MANAGEMENT AND PROCESSING

Developments in the news industry are fueling a growing tendency for large media organizations to

manage their digital assets on an enterprise-wide basis. With the consolidation in the news industry,

content produced by local newspapers is shared with other news organizations under the same parent

company. Photographs by Arizona Republic staff photographers, for example, are maintained under the

control of Gannett, the Republic’s parent company, in its own “enterprise-wide” digital asset

management system. With media convergence, news stories, photographs, video, audio, and other

content can be versioned for several media platforms under the local newspaper or parent’s control.

Because of the heavy storage demands that multimedia files impose on these systems, much

enterprise news content is no longer maintained locally. The New York Times, for example, uses

Amazon’s S3 service, a “cloud” data center, to store its photographs. In some instances even the actual

management of a newspaper’s content is outsourced. Many of the DAM systems can be either licensed

and integrated locally with a newspaper’s IT environment or deployed on a “software-as-a-service”

basis, hosted by the system’s producer. And content archived in the SAVE system for many newspapers

is hosted by NewsBank.

Some systems rely on third-party service providers for subject tagging and indexing of content held in

their own digital content management systems. SCC MediaServer accommodates the use of Thomson

Reuters’ Open Calais service for semantic analysis and tagging of Hearst newspapers’ and magazines’

archived content. (Open Calais can be used either as an on-site application or as a hosted solution

managed by Reuters.)

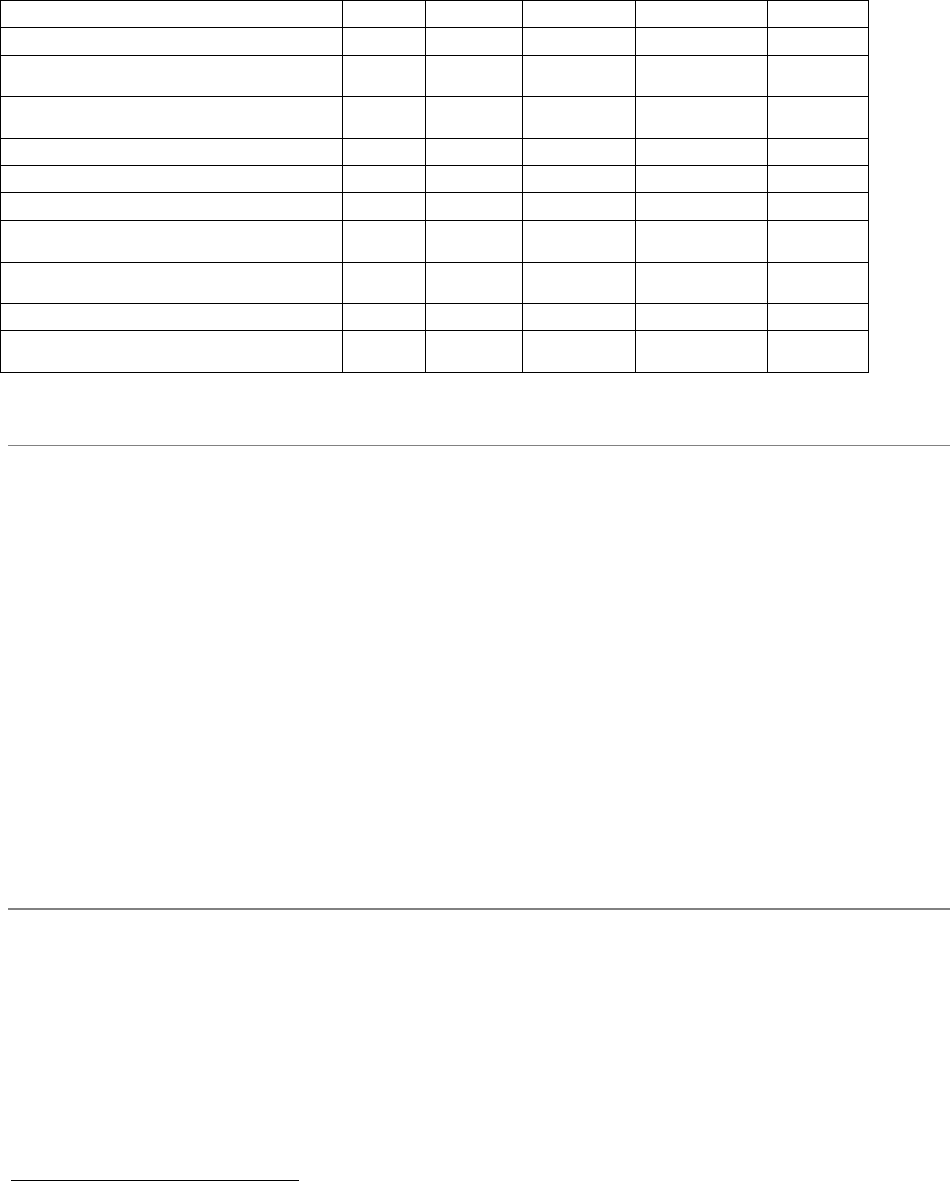

C. DISTRIBUTING NEWS CONTENT

Most newspaper publishers now distribute their news content both in print and electronically. They

output electronic page image files for print editions and for republication as electronic facsimiles. The

facsimiles are sometimes available on the publisher’s own Web platform, but more often are

republished by aggregators like ProQuest, Tecnavia and NewspaperDirect. The publishers also generate

electronic text feeds of their locally produced news content for re-aggregation in commercial news

databases like LexisNexis, and for syndication through affiliated organizations like the Associated Press.

Finally, newspapers generate formatted text and multimedia content that is disseminated through their

own Web platforms and through a variety of mobile devices such as cell phones, PDAs and tablet

computers.

Content/data:

• Articles, feature texts

• Graphics

• Photographs

• Audio recordings

• Video recordings

• Tables

• Databases

• Data visualizations

• News object metadata

• Web design templates

• Style sheets

• User data

• Algorithms and programming code

Actors:

• Newspaper publishers (Arizona Republic, Seattle Post-Intelligencer, the Tribune Company, Wisconsin State Journal)

• Parent organizations (Gannett, Hearst Newspapers, Tribune Media)

• Affiliated newspapers and news organizations (print, broadcast)

• Text aggregators (LexisNexis, NewsBank, Bloomberg)

• E-facsimile aggregators (Olive, NewspaperDirect, Tecnavia)

• Micropublishers and e-publishers (ProQuest, Gale, NewsBank)

• Search engines and news reader services (Google, FastTrack News, News Rover)

• Wire services, syndicates and photo agencies

• Image processing services

• Printers

• Bloggers

• Social media platforms (Facebook, Twitter)

• Libraries

• Indexing services (Reuters, Nstein)

• API programmers

• Ad-servers (AdTech, Yahoo!)

Printing newspapers, for all but a few publishers, is still largely a local operation. But most distribution

activities are now managed at the parent company level, or rely on specialized service providers

such as YouTube, WordPress and others operating at the national or global level. The early steps in the

preparation of text, data and still image content for these distribution channels are taken in the editorial

system. But content destined for Web and mobile platforms soon parts company with versions of the

same content for print and aggregator distribution. Publishers use a common, in-house work stream to

produce inputs for “typesetting” and production of print and electronic facsimile editions. The

production streams for Web site, mobile device, and other electronic content diverge from the print and

electronic facsimile workflows in the editorial system.

C.1 PRINT DISTRIBUTION

Print newspapers follow a long-established distribution model that has not changed significantly in over

a century. Print newspapers are sold by the issue to consumers by retail vendors; on a subscription basis

directly to households and businesses; and to institutions (including libraries) indirectly through

subscription services. Delivery to subscribers is through carriers under the auspices of the newspaper

publisher or a sub-contractor, and by mail. Some newspapers are given away free and are largely

supported by advertising.

In the past libraries have represented a secondary “market” for printed newspapers, but have provided

a useful service for news publishers. Libraries have served as repositories for the long-term safekeeping

of newspaper back files. Local libraries and historical societies have systematically acquired local

newspapers for their current awareness and historical value. And the Library of Congress (like other

national libraries) has been able to amass substantial newspaper holdings from publisher deposits of

newspapers with the U.S. Copyright Office.

In the past libraries have not only stored and provided access to newspaper back files, but have invested

a great deal in indexing and bibliographic analysis of the newspapers as well. Hundreds of local and

state historical societies and state and academic libraries have created detailed indexes and “clippings

files” that identified locally important or special interest content in those publications. These

stewardship activities formed the foundation upon which major preservation and access programs like

the NEH’s long-running United States Newspaper Program were built.

Given the bulk and fragility of aging newspapers and the resource-intensive nature of the indexing and

cataloging work, these services had a significant cost. For a long time that cost was underwritten by

local, federal and philanthropic grant making, as a public good. Commercial microform publishers have

played an important role in serving this secondary, research market as well, generating revenue from

the sale of microfilm and microfiche copies of the newspapers under license from the publishers. Some

micropublishers have employed the same model to distribute page images of the same newspapers in

digital form.

U.S. copyright law affords libraries certain rights to make copies of the newspapers available for

study, research and other non-commercial purposes. Publishers have also granted micropublishers the